Caffe Nets, Layers, and Blobs

1. What is Caffe?

A widely used deep learning framework (a C++/CUDA architecture for deep learning).

reference Model (http://arxiv.org/pdf/1311.2524v1.pdf)

Developed by the Berkeley Vision and Learning Center (BVLC).

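Expression - models and optimization settings are defined in configuration files rather than hard-coded, so designing a model or its optimization only requires changing the configuration.

Speed - fast, thanks to parallel computation on the GPU.

Extensible code - many developers contribute to the code base.

Community - a large, active community.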

1.1 How to Install (for Ubuntu)

1.2 Nets, Layers, and Blobs

- The structure of a Caffe model

1.2.1 Blobs

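A blob wraps the actual data so that Caffe can process it in a uniform way.

It also handles synchronization between the CPU and the GPU.

Memory is allocated lazily, i.e. only when it is first needed.

Blobs provide a unified memory interface and are used to hold image batches, model parameters, and the derivatives used for optimization.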

A data blob is a 4-D array with dimensions Number x Channel x Height x Width (N x C x H x W).

Number is the batch size of the data, and Channel is the number of channels per pixel (e.g. RGB = 3 channels).
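Since a blob keeps its four dimensions in one contiguous array, an (n, c, h, w) position has to be turned into a flat index. A minimal sketch, assuming row-major layout with W varying fastest (the same formula Caffe's Blob::offset() uses); blob_count and blob_offset are hypothetical helper names:

```python
def blob_count(shape):
    """Number of elements in a blob with the given shape."""
    count = 1
    for dim in shape:
        count *= dim
    return count


def blob_offset(shape, n, c, h, w):
    """Flat (row-major) index of element (n, c, h, w) in an N x C x H x W blob."""
    _, channels, height, width = shape
    return ((n * channels + c) * height + h) * width + w


if __name__ == "__main__":
    shape = (10, 96, 55, 55)  # conv1 top shape reported in the CaffeNet log
    print(blob_count(shape))               # 2904000
    print(blob_offset(shape, 0, 0, 0, 1))  # 1      (next pixel along W)
    print(blob_offset(shape, 0, 1, 0, 0))  # 3025   (next channel: skip H*W)
    print(blob_offset(shape, 1, 0, 0, 0))  # 290400 (next image: skip C*H*W)
```

The count 2904000 is the same number shown in parentheses on the conv1 "Top shape" line of the CaffeNet log in this notebook.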

The shape of a parameter blob depends on the layer's type and configuration.

ex) For a convolution layer with 96 filters of size 11x11 and 3 input channels, the weight blob is 96 x 3 x 11 x 11.

ex) For a fully-connected layer with 1000 outputs and 1024 input channels, the weight blob is 1 x 1 x 1000 x 1024 (1000 x 1024 in Caffe versions with N-D blobs).
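The two examples can be checked with a short sketch. It assumes Caffe's conventions that a convolution weight blob is num_output x (input channels / group) x kernel_h x kernel_w, and that each layer also has a bias blob with one value per output (bias_term defaults to true). The fully-connected weight uses the 2-D N-D blob shape; the function names are hypothetical.

```python
def count(shape):
    """Number of elements in a blob with the given shape."""
    total = 1
    for dim in shape:
        total *= dim
    return total


def conv_param_shapes(num_output, in_channels, kernel_h, kernel_w, group=1):
    """(weight, bias) shapes of a convolution layer; each filter sees in_channels / group channels."""
    weight = (num_output, in_channels // group, kernel_h, kernel_w)
    bias = (num_output,)
    return weight, bias


def inner_product_param_shapes(num_output, num_input):
    """(weight, bias) shapes of a fully-connected layer.

    Older 4-D blobs store the same weight as 1 x 1 x num_output x num_input.
    """
    weight = (num_output, num_input)
    bias = (num_output,)
    return weight, bias


if __name__ == "__main__":
    w, b = conv_param_shapes(96, 3, 11, 11)
    print(w, count(w), b)  # (96, 3, 11, 11) 34848 (96,)
    w, b = inner_product_param_shapes(1000, 1024)
    print(w, count(w), b)  # (1000, 1024) 1024000 (1000,)
```

With group: 2, as in CaffeNet's conv2 (whose bottom has 96 channels), conv_param_shapes(256, 96, 5, 5, group=2) gives a 256 x 48 x 5 x 5 weight blob, because each filter only sees half of the input channels.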

1.2.1.1 Implementation Details

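- There are two ways to access the data stored on the CPU and the GPU:

- const (read-only) access

const Dtype* cpu_data() const;

- mutable (read-write) access

Dtype* mutable_cpu_data();

(The GPU accessors gpu_data() and mutable_gpu_data() follow the same pattern.)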

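- Why it is designed this way: internally, a Blob uses a class called SyncedMem to keep the CPU and GPU copies in sync, and SyncedMem has to know about every access in order to decide when data must be copied between the CPU and the GPU. You should therefore call these accessor functions every time you need a pointer, rather than storing a pointer and modifying the data through it later. Use the const accessors whenever you do not need to change the values, since a mutable access marks the copy on the other device as out of date.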

```cpp
// Assuming that data are on the CPU initially, and we have a blob.
const Dtype* foo;
Dtype* bar;
foo = blob.gpu_data(); // data copied cpu->gpu.
foo = blob.cpu_data(); // no data copied since both have up-to-date contents.
bar = blob.mutable_gpu_data(); // no data copied.
// ... some operations ...
bar = blob.mutable_gpu_data(); // no data copied when we are still on GPU.
foo = blob.cpu_data(); // data copied gpu->cpu, since the gpu side has modified the data
foo = blob.gpu_data(); // no data copied since both have up-to-date contents
bar = blob.mutable_cpu_data(); // still no data copied.
bar = blob.mutable_gpu_data(); // data copied cpu->gpu.
bar = blob.mutable_cpu_data(); // data copied gpu->cpu.
```
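To see why every access has to go through these functions, the copy rules in the C++ comments can be replayed with a small state machine. This is a toy Python model, not Caffe code: it assumes SyncedMem tracks where the freshest copy lives (uninitialized, CPU, GPU, or synced), allocates lazily on first access, and copies only when the requested side is stale. The class and state names are hypothetical.

```python
# Toy model of SyncedMem's bookkeeping: it records which copies would happen,
# but holds no real CPU or GPU memory.
UNINITIALIZED, HEAD_AT_CPU, HEAD_AT_GPU, SYNCED = "uninitialized", "cpu", "gpu", "synced"


class ToySyncedMem:
    def __init__(self, head=UNINITIALIZED):
        self.head = head  # where the freshest copy currently lives
        self.log = []     # one entry per accessor call: the copy/allocation it caused, or None

    def _to_cpu(self):
        if self.head == UNINITIALIZED:
            self.head = HEAD_AT_CPU   # lazy allocation on first CPU access
            return "alloc cpu"
        if self.head == HEAD_AT_GPU:
            self.head = SYNCED        # GPU copy is newer, so bring it back
            return "gpu->cpu"
        return None                   # CPU copy is already up to date

    def _to_gpu(self):
        if self.head == UNINITIALIZED:
            self.head = HEAD_AT_GPU   # lazy allocation on first GPU access
            return "alloc gpu"
        if self.head == HEAD_AT_CPU:
            self.head = SYNCED        # CPU copy is newer, so push it to the GPU
            return "cpu->gpu"
        return None                   # GPU copy is already up to date

    def cpu_data(self):
        self.log.append(self._to_cpu())

    def gpu_data(self):
        self.log.append(self._to_gpu())

    def mutable_cpu_data(self):
        self.log.append(self._to_cpu())
        self.head = HEAD_AT_CPU       # caller may write, so the GPU copy becomes stale

    def mutable_gpu_data(self):
        self.log.append(self._to_gpu())
        self.head = HEAD_AT_GPU       # caller may write, so the CPU copy becomes stale


if __name__ == "__main__":
    mem = ToySyncedMem(head=HEAD_AT_CPU)  # "data are on the CPU initially"
    for access in ["gpu_data", "cpu_data", "mutable_gpu_data", "mutable_gpu_data",
                   "cpu_data", "gpu_data", "mutable_cpu_data", "mutable_gpu_data",
                   "mutable_cpu_data"]:
        getattr(mem, access)()
    print(mem.log)
    # ['cpu->gpu', None, None, None, 'gpu->cpu', None, None, 'cpu->gpu', 'gpu->cpu']
```

The printed sequence matches the C++ comments line by line. A freshly constructed ToySyncedMem() also shows the lazy allocation: nothing is allocated until the first accessor call.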

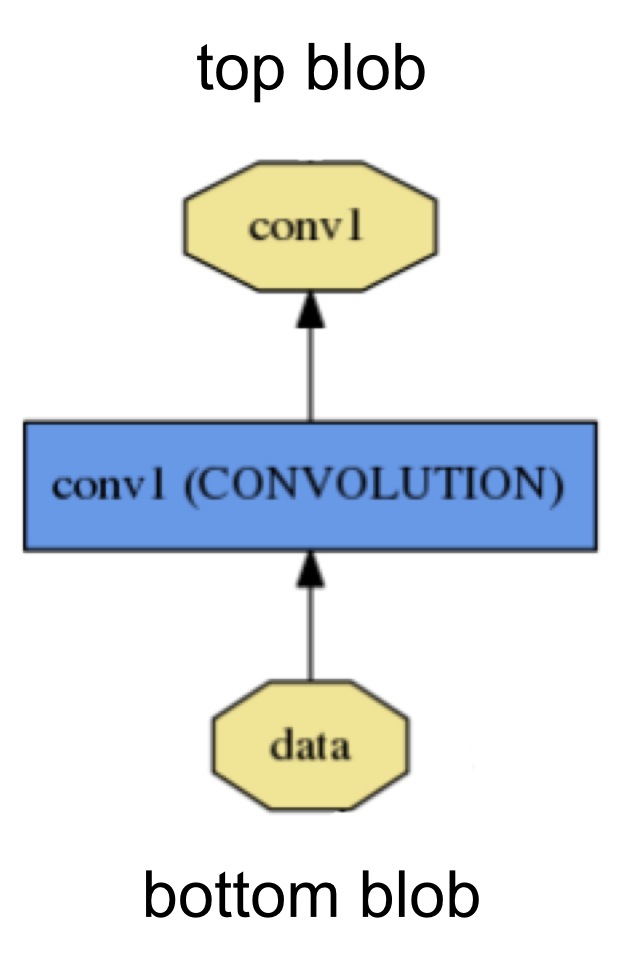

1.2.2 Layer

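- Layers perform operations such as convolution filters, pooling, inner products, and nonlinearities like rectified-linear (ReLU) and sigmoid.

- Layer catalogue: http://caffe.berkeleyvision.org/tutorial/layers.html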

```
layer {
  name: "conv1"
  type: "Convolution"
  bottom: "data"
  top: "conv1"
  convolution_param {
    num_output: 20
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "xavier"
    }
  }
}
```
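The weight_filler in this definition asks Caffe to initialize conv1's weights with the "xavier" filler. With its default variance_norm (FAN_IN), Caffe's XavierFiller draws each weight uniformly from [-scale, scale] with scale = sqrt(3 / fan_in), where fan_in = input channels x kernel_h x kernel_w, so each weight has variance 1 / fan_in. The sketch below mimics that in plain Python. The definition does not say how many channels "data" has, so a single input channel is assumed here (hypothetical), which makes the weight blob 20 x 1 x 5 x 5.

```python
import math
import random


def xavier_fill(shape, seed=0):
    """Fill a weight blob of the given shape the way an Xavier (FAN_IN) filler would.

    Returns (values, scale): a flat list of prod(shape) floats drawn uniformly
    from [-scale, scale], with scale = sqrt(3 / fan_in).
    """
    count = 1
    for dim in shape:
        count *= dim
    fan_in = count // shape[0]        # channels * kernel_h * kernel_w
    scale = math.sqrt(3.0 / fan_in)   # uniform(-a, a) has variance a^2 / 3 = 1 / fan_in
    rng = random.Random(seed)         # seeded for reproducibility
    values = [rng.uniform(-scale, scale) for _ in range(count)]
    return values, scale


if __name__ == "__main__":
    # conv1 above: num_output 20, kernel_size 5; one input channel is an assumption.
    weights, scale = xavier_fill((20, 1, 5, 5))
    print(len(weights), round(scale, 4))  # 500 0.3464
```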

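- Each layer performs three important computations:

- Setup: initializes the layer and its connections (run once, at model initialization).

- Forward: computes the output from the given input (bottom) and passes it to the top.

- Backward: given the gradient with respect to the output (top), computes the gradient with respect to the input and passes it back to the bottom; layers with parameters also compute the gradients of those parameters. This is how errors are propagated back for learning.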
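The three computations can be illustrated with a toy fully-connected (inner product) layer in plain Python. It is a hypothetical sketch that only mirrors the division of work; Caffe's real C++ layers implement LayerSetUp/Reshape, Forward_cpu/Forward_gpu and Backward_cpu/Backward_gpu on blobs rather than Python lists. Backward follows the rule above: it receives the gradient with respect to the top, stores the gradients of its own parameters, and returns the gradient with respect to the bottom.

```python
import random


class ToyInnerProductLayer:
    """Hypothetical fully-connected layer split into setup / forward / backward.

    bottom: list of N samples, each a list of K floats; top: N lists of num_output floats.
    """

    def __init__(self, num_output):
        self.num_output = num_output
        self.weight = None       # num_output x K, created in setup()
        self.bias = None         # num_output values
        self.weight_diff = None  # parameter gradients, filled in by backward()
        self.bias_diff = None

    def setup(self, bottom, seed=0):
        """Run once: infer K from the bottom and allocate the parameters."""
        k = len(bottom[0])
        rng = random.Random(seed)
        self.weight = [[rng.uniform(-0.1, 0.1) for _ in range(k)] for _ in range(self.num_output)]
        self.bias = [0.0] * self.num_output

    def forward(self, bottom):
        """top[n][i] = sum_j weight[i][j] * bottom[n][j] + bias[i]"""
        return [[sum(w * x for w, x in zip(row, sample)) + b
                 for row, b in zip(self.weight, self.bias)]
                for sample in bottom]

    def backward(self, top_diff, bottom):
        """Take dLoss/dTop, store parameter gradients, and return dLoss/dBottom."""
        n_samples, k = len(bottom), len(bottom[0])
        self.weight_diff = [[sum(top_diff[n][i] * bottom[n][j] for n in range(n_samples))
                             for j in range(k)]
                            for i in range(self.num_output)]
        self.bias_diff = [sum(top_diff[n][i] for n in range(n_samples))
                          for i in range(self.num_output)]
        return [[sum(top_diff[n][i] * self.weight[i][j] for i in range(self.num_output))
                 for j in range(k)]
                for n in range(n_samples)]


if __name__ == "__main__":
    layer = ToyInnerProductLayer(num_output=2)
    bottom = [[1.0, 0.0, 2.0]]                          # N = 1 sample with K = 3 inputs
    layer.setup(bottom)                                 # once: weights become 2 x 3
    layer.weight = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]   # fixed values for a readable demo
    layer.bias = [0.5, -1.0]
    print(layer.forward(bottom))                        # [[7.5, 15.0]]
    print(layer.backward([[1.0, 1.0]], bottom))         # [[5.0, 7.0, 9.0]]
    print(layer.bias_diff)                              # [1.0, 1.0]
```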

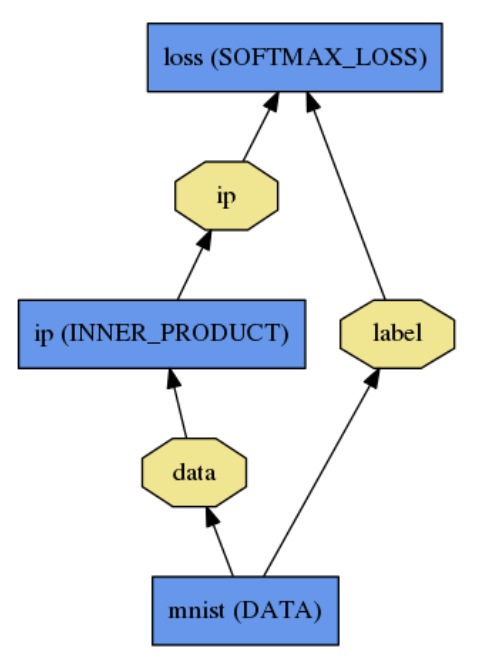

1.2.3 Net

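- A net is a collection of layers connected as a DAG (directed acyclic graph).

- In Caffe, a net is created from its definition, and the definition is checked (e.g. that every layer's inputs are connected) while the net is built.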

```
name: "LogReg"
layer {
  name: "mnist"
  type: "Data"
  top: "data"
  top: "label"
  data_param {
    source: "input_leveldb"
    batch_size: 64
  }
}
layer {
  name: "ip"
  type: "InnerProduct"
  bottom: "data"
  top: "ip"
  inner_product_param {
    num_output: 2
  }
}
layer {
  name: "loss"
  type: "SoftmaxWithLoss"
  bottom: "ip"
  bottom: "label"
  top: "loss"
}
```
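The kind of connectivity check Caffe performs while building a net can be sketched in a few lines. This hypothetical Python function assumes, as Caffe does, that layers are listed so that every bottom blob has already been produced by an earlier layer (or is a net input), which also rules out cycles. It raises an error otherwise and returns the blobs that are produced but never consumed, which Caffe reports as the network's outputs (compare "This network produces output prob" in the CaffeNet log).

```python
def check_net(layers, net_inputs=()):
    """Validate layer connectivity and return the net's output blobs.

    layers: list of dicts with "name" and optional "bottom"/"top" lists, in
    definition order. In-place layers (same blob as bottom and top) are allowed.
    """
    produced = set(net_inputs)   # every blob created so far
    unconsumed = []              # blobs nobody has read yet, in creation order
    for layer in layers:
        for blob in layer.get("bottom", []):
            if blob not in produced:
                raise ValueError(f"Unknown bottom blob '{blob}' (layer '{layer['name']}')")
            if blob in unconsumed:
                unconsumed.remove(blob)
        for blob in layer.get("top", []):
            produced.add(blob)
            if blob not in unconsumed:
                unconsumed.append(blob)
    return unconsumed


# The LogReg definition above, reduced to its connectivity.
LOGREG = [
    {"name": "mnist", "top": ["data", "label"]},
    {"name": "ip", "bottom": ["data"], "top": ["ip"]},
    {"name": "loss", "bottom": ["ip", "label"], "top": ["loss"]},
]

if __name__ == "__main__":
    print(check_net(LOGREG))  # ['loss']
    try:
        check_net(list(reversed(LOGREG)))
    except ValueError as err:
        print(err)  # Unknown bottom blob 'ip' (layer 'loss')
```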

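Below, the Python classification script bundled with Caffe is run from the notebook on the sample cat image, using the pretrained BVLC reference CaffeNet model. The output shows the network definition being loaded, the setup log for each layer, and the predicted classes:

!python /home/konan/caffe/python/classify.py --print_results /home/konan/caffe/examples/images/cat.jpg foo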

CPU mode

WARNING: Logging before InitGoogleLogging() is written to STDERR

I0511 16:12:12.324367 153330 net.cpp:42] Initializing net from parameters:

name: "CaffeNet"

input: "data"

input_dim: 10

input_dim: 3

input_dim: 227

input_dim: 227

state {

phase: TEST

}

layer {

name: "conv1"

type: "Convolution"

bottom: "data"

top: "conv1"

convolution_param {

num_output: 96

kernel_size: 11

stride: 4

}

}

layer {

name: "relu1"

type: "ReLU"

bottom: "conv1"

top: "conv1"

}

layer {

name: "pool1"

type: "Pooling"

bottom: "conv1"

top: "pool1"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "norm1"

type: "LRN"

bottom: "pool1"

top: "norm1"

lrn_param {

local_size: 5

alpha: 0.0001

beta: 0.75

}

}

layer {

name: "conv2"

type: "Convolution"

bottom: "norm1"

top: "conv2"

convolution_param {

num_output: 256

pad: 2

kernel_size: 5

group: 2

}

}

layer {

name: "relu2"

type: "ReLU"

bottom: "conv2"

top: "conv2"

}

layer {

name: "pool2"

type: "Pooling"

bottom: "conv2"

top: "pool2"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "norm2"

type: "LRN"

bottom: "pool2"

top: "norm2"

lrn_param {

local_size: 5

alpha: 0.0001

beta: 0.75

}

}

layer {

name: "conv3"

type: "Convolution"

bottom: "norm2"

top: "conv3"

convolution_param {

num_output: 384

pad: 1

kernel_size: 3

}

}

layer {

name: "relu3"

type: "ReLU"

bottom: "conv3"

top: "conv3"

}

layer {

name: "conv4"

type: "Convolution"

bottom: "conv3"

top: "conv4"

convolution_param {

num_output: 384

pad: 1

kernel_size: 3

group: 2

}

}

layer {

name: "relu4"

type: "ReLU"

bottom: "conv4"

top: "conv4"

}

layer {

name: "conv5"

type: "Convolution"

bottom: "conv4"

top: "conv5"

convolution_param {

num_output: 256

pad: 1

kernel_size: 3

group: 2

}

}

layer {

name: "relu5"

type: "ReLU"

bottom: "conv5"

top: "conv5"

}

layer {

name: "pool5"

type: "Pooling"

bottom: "conv5"

top: "pool5"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "fc6"

type: "InnerProduct"

bottom: "pool5"

top: "fc6"

inner_product_param {

num_output: 4096

}

}

layer {

name: "relu6"

type: "ReLU"

bottom: "fc6"

top: "fc6"

}

layer {

name: "drop6"

type: "Dropout"

bottom: "fc6"

top: "fc6"

dropout_param {

dropout_ratio: 0.5

}

}

layer {

name: "fc7"

type: "InnerProduct"

bottom: "fc6"

top: "fc7"

inner_product_param {

num_output: 4096

}

}

layer {

name: "relu7"

type: "ReLU"

bottom: "fc7"

top: "fc7"

}

layer {

name: "drop7"

type: "Dropout"

bottom: "fc7"

top: "fc7"

dropout_param {

dropout_ratio: 0.5

}

}

layer {

name: "fc8"

type: "InnerProduct"

bottom: "fc7"

top: "fc8"

inner_product_param {

num_output: 1000

}

}

layer {

name: "prob"

type: "Softmax"

bottom: "fc8"

top: "prob"

}

I0511 16:12:12.325275 153330 net.cpp:340] Input 0 -> data

I0511 16:12:12.325340 153330 layer_factory.hpp:74] Creating layer conv1

I0511 16:12:12.325369 153330 net.cpp:84] Creating Layer conv1

I0511 16:12:12.325384 153330 net.cpp:380] conv1 <- data

I0511 16:12:12.325405 153330 net.cpp:338] conv1 -> conv1

I0511 16:12:12.325429 153330 net.cpp:113] Setting up conv1

I0511 16:12:12.325574 153330 net.cpp:120] Top shape: 10 96 55 55 (2904000)

I0511 16:12:12.325608 153330 layer_factory.hpp:74] Creating layer relu1

I0511 16:12:12.325628 153330 net.cpp:84] Creating Layer relu1

I0511 16:12:12.325642 153330 net.cpp:380] relu1 <- conv1

I0511 16:12:12.325657 153330 net.cpp:327] relu1 -> conv1 (in-place)

I0511 16:12:12.325673 153330 net.cpp:113] Setting up relu1

I0511 16:12:12.325692 153330 net.cpp:120] Top shape: 10 96 55 55 (2904000)

I0511 16:12:12.325706 153330 layer_factory.hpp:74] Creating layer pool1

I0511 16:12:12.325723 153330 net.cpp:84] Creating Layer pool1

I0511 16:12:12.325737 153330 net.cpp:380] pool1 <- conv1

I0511 16:12:12.325752 153330 net.cpp:338] pool1 -> pool1

I0511 16:12:12.325770 153330 net.cpp:113] Setting up pool1

I0511 16:12:12.325799 153330 net.cpp:120] Top shape: 10 96 27 27 (699840)

I0511 16:12:12.325814 153330 layer_factory.hpp:74] Creating layer norm1

I0511 16:12:12.325836 153330 net.cpp:84] Creating Layer norm1

I0511 16:12:12.325850 153330 net.cpp:380] norm1 <- pool1

I0511 16:12:12.325865 153330 net.cpp:338] norm1 -> norm1

I0511 16:12:12.325882 153330 net.cpp:113] Setting up norm1

I0511 16:12:12.325904 153330 net.cpp:120] Top shape: 10 96 27 27 (699840)

I0511 16:12:12.325919 153330 layer_factory.hpp:74] Creating layer conv2

I0511 16:12:12.325937 153330 net.cpp:84] Creating Layer conv2

I0511 16:12:12.325949 153330 net.cpp:380] conv2 <- norm1

I0511 16:12:12.325965 153330 net.cpp:338] conv2 -> conv2

I0511 16:12:12.325983 153330 net.cpp:113] Setting up conv2

I0511 16:12:12.326581 153330 net.cpp:120] Top shape: 10 256 27 27 (1866240)

I0511 16:12:12.326609 153330 layer_factory.hpp:74] Creating layer relu2

I0511 16:12:12.326627 153330 net.cpp:84] Creating Layer relu2

I0511 16:12:12.326639 153330 net.cpp:380] relu2 <- conv2

I0511 16:12:12.326654 153330 net.cpp:327] relu2 -> conv2 (in-place)

I0511 16:12:12.326670 153330 net.cpp:113] Setting up relu2

I0511 16:12:12.326686 153330 net.cpp:120] Top shape: 10 256 27 27 (1866240)

I0511 16:12:12.326699 153330 layer_factory.hpp:74] Creating layer pool2

I0511 16:12:12.326715 153330 net.cpp:84] Creating Layer pool2

I0511 16:12:12.326728 153330 net.cpp:380] pool2 <- conv2

I0511 16:12:12.326743 153330 net.cpp:338] pool2 -> pool2

I0511 16:12:12.326760 153330 net.cpp:113] Setting up pool2

I0511 16:12:12.326777 153330 net.cpp:120] Top shape: 10 256 13 13 (432640)

I0511 16:12:12.326792 153330 layer_factory.hpp:74] Creating layer norm2

I0511 16:12:12.326807 153330 net.cpp:84] Creating Layer norm2

I0511 16:12:12.326820 153330 net.cpp:380] norm2 <- pool2

I0511 16:12:12.326836 153330 net.cpp:338] norm2 -> norm2

I0511 16:12:12.326853 153330 net.cpp:113] Setting up norm2

I0511 16:12:12.326869 153330 net.cpp:120] Top shape: 10 256 13 13 (432640)

I0511 16:12:12.326884 153330 layer_factory.hpp:74] Creating layer conv3

I0511 16:12:12.326942 153330 net.cpp:84] Creating Layer conv3

I0511 16:12:12.326956 153330 net.cpp:380] conv3 <- norm2

I0511 16:12:12.326972 153330 net.cpp:338] conv3 -> conv3

I0511 16:12:12.326988 153330 net.cpp:113] Setting up conv3

I0511 16:12:12.329548 153330 net.cpp:120] Top shape: 10 384 13 13 (648960)

I0511 16:12:12.329579 153330 layer_factory.hpp:74] Creating layer relu3

I0511 16:12:12.329597 153330 net.cpp:84] Creating Layer relu3

I0511 16:12:12.329612 153330 net.cpp:380] relu3 <- conv3

I0511 16:12:12.329627 153330 net.cpp:327] relu3 -> conv3 (in-place)

I0511 16:12:12.329643 153330 net.cpp:113] Setting up relu3

I0511 16:12:12.329659 153330 net.cpp:120] Top shape: 10 384 13 13 (648960)

I0511 16:12:12.329673 153330 layer_factory.hpp:74] Creating layer conv4

I0511 16:12:12.329689 153330 net.cpp:84] Creating Layer conv4

I0511 16:12:12.329701 153330 net.cpp:380] conv4 <- conv3

I0511 16:12:12.329716 153330 net.cpp:338] conv4 -> conv4

I0511 16:12:12.329735 153330 net.cpp:113] Setting up conv4

I0511 16:12:12.331092 153330 net.cpp:120] Top shape: 10 384 13 13 (648960)

I0511 16:12:12.331120 153330 layer_factory.hpp:74] Creating layer relu4

I0511 16:12:12.331136 153330 net.cpp:84] Creating Layer relu4

I0511 16:12:12.331151 153330 net.cpp:380] relu4 <- conv4

I0511 16:12:12.331166 153330 net.cpp:327] relu4 -> conv4 (in-place)

I0511 16:12:12.331182 153330 net.cpp:113] Setting up relu4

I0511 16:12:12.331197 153330 net.cpp:120] Top shape: 10 384 13 13 (648960)

I0511 16:12:12.331212 153330 layer_factory.hpp:74] Creating layer conv5

I0511 16:12:12.331228 153330 net.cpp:84] Creating Layer conv5

I0511 16:12:12.331240 153330 net.cpp:380] conv5 <- conv4

I0511 16:12:12.331255 153330 net.cpp:338] conv5 -> conv5

I0511 16:12:12.331271 153330 net.cpp:113] Setting up conv5

I0511 16:12:12.332605 153330 net.cpp:120] Top shape: 10 256 13 13 (432640)

I0511 16:12:12.332635 153330 layer_factory.hpp:74] Creating layer relu5

I0511 16:12:12.332658 153330 net.cpp:84] Creating Layer relu5

I0511 16:12:12.332672 153330 net.cpp:380] relu5 <- conv5

I0511 16:12:12.332689 153330 net.cpp:327] relu5 -> conv5 (in-place)

I0511 16:12:12.332705 153330 net.cpp:113] Setting up relu5

I0511 16:12:12.332722 153330 net.cpp:120] Top shape: 10 256 13 13 (432640)

I0511 16:12:12.332746 153330 layer_factory.hpp:74] Creating layer pool5

I0511 16:12:12.332765 153330 net.cpp:84] Creating Layer pool5

I0511 16:12:12.332779 153330 net.cpp:380] pool5 <- conv5

I0511 16:12:12.332798 153330 net.cpp:338] pool5 -> pool5

I0511 16:12:12.332816 153330 net.cpp:113] Setting up pool5

I0511 16:12:12.332837 153330 net.cpp:120] Top shape: 10 256 6 6 (92160)

I0511 16:12:12.332852 153330 layer_factory.hpp:74] Creating layer fc6

I0511 16:12:12.332875 153330 net.cpp:84] Creating Layer fc6

I0511 16:12:12.332890 153330 net.cpp:380] fc6 <- pool5

I0511 16:12:12.332905 153330 net.cpp:338] fc6 -> fc6

I0511 16:12:12.332922 153330 net.cpp:113] Setting up fc6

I0511 16:12:12.403771 153330 net.cpp:120] Top shape: 10 4096 (40960)

I0511 16:12:12.403841 153330 layer_factory.hpp:74] Creating layer relu6

I0511 16:12:12.403875 153330 net.cpp:84] Creating Layer relu6

I0511 16:12:12.403893 153330 net.cpp:380] relu6 <- fc6

I0511 16:12:12.403911 153330 net.cpp:327] relu6 -> fc6 (in-place)

I0511 16:12:12.403934 153330 net.cpp:113] Setting up relu6

I0511 16:12:12.403950 153330 net.cpp:120] Top shape: 10 4096 (40960)

I0511 16:12:12.403964 153330 layer_factory.hpp:74] Creating layer drop6

I0511 16:12:12.403988 153330 net.cpp:84] Creating Layer drop6

I0511 16:12:12.404001 153330 net.cpp:380] drop6 <- fc6

I0511 16:12:12.404017 153330 net.cpp:327] drop6 -> fc6 (in-place)

I0511 16:12:12.404033 153330 net.cpp:113] Setting up drop6

I0511 16:12:12.404057 153330 net.cpp:120] Top shape: 10 4096 (40960)

I0511 16:12:12.404072 153330 layer_factory.hpp:74] Creating layer fc7

I0511 16:12:12.404093 153330 net.cpp:84] Creating Layer fc7

I0511 16:12:12.404108 153330 net.cpp:380] fc7 <- fc6

I0511 16:12:12.404124 153330 net.cpp:338] fc7 -> fc7

I0511 16:12:12.404142 153330 net.cpp:113] Setting up fc7

I0511 16:12:12.436167 153330 net.cpp:120] Top shape: 10 4096 (40960)

I0511 16:12:12.436235 153330 layer_factory.hpp:74] Creating layer relu7

I0511 16:12:12.436264 153330 net.cpp:84] Creating Layer relu7

I0511 16:12:12.436281 153330 net.cpp:380] relu7 <- fc7

I0511 16:12:12.436301 153330 net.cpp:327] relu7 -> fc7 (in-place)

I0511 16:12:12.436323 153330 net.cpp:113] Setting up relu7

I0511 16:12:12.436342 153330 net.cpp:120] Top shape: 10 4096 (40960)

I0511 16:12:12.436355 153330 layer_factory.hpp:74] Creating layer drop7

I0511 16:12:12.436378 153330 net.cpp:84] Creating Layer drop7

I0511 16:12:12.436391 153330 net.cpp:380] drop7 <- fc7

I0511 16:12:12.436408 153330 net.cpp:327] drop7 -> fc7 (in-place)

I0511 16:12:12.436424 153330 net.cpp:113] Setting up drop7

I0511 16:12:12.436442 153330 net.cpp:120] Top shape: 10 4096 (40960)

I0511 16:12:12.436456 153330 layer_factory.hpp:74] Creating layer fc8

I0511 16:12:12.436473 153330 net.cpp:84] Creating Layer fc8

I0511 16:12:12.436486 153330 net.cpp:380] fc8 <- fc7

I0511 16:12:12.436502 153330 net.cpp:338] fc8 -> fc8

I0511 16:12:12.436522 153330 net.cpp:113] Setting up fc8

I0511 16:12:12.444764 153330 net.cpp:120] Top shape: 10 1000 (10000)

I0511 16:12:12.444829 153330 layer_factory.hpp:74] Creating layer prob

I0511 16:12:12.444859 153330 net.cpp:84] Creating Layer prob

I0511 16:12:12.444875 153330 net.cpp:380] prob <- fc8

I0511 16:12:12.444895 153330 net.cpp:338] prob -> prob

I0511 16:12:12.444918 153330 net.cpp:113] Setting up prob

I0511 16:12:12.444958 153330 net.cpp:120] Top shape: 10 1000 (10000)

I0511 16:12:12.444974 153330 net.cpp:169] prob does not need backward computation.

I0511 16:12:12.444988 153330 net.cpp:169] fc8 does not need backward computation.

I0511 16:12:12.445001 153330 net.cpp:169] drop7 does not need backward computation.

I0511 16:12:12.445014 153330 net.cpp:169] relu7 does not need backward computation.

I0511 16:12:12.445026 153330 net.cpp:169] fc7 does not need backward computation.

I0511 16:12:12.445039 153330 net.cpp:169] drop6 does not need backward computation.

I0511 16:12:12.445051 153330 net.cpp:169] relu6 does not need backward computation.

I0511 16:12:12.445063 153330 net.cpp:169] fc6 does not need backward computation.

I0511 16:12:12.445076 153330 net.cpp:169] pool5 does not need backward computation.

I0511 16:12:12.445088 153330 net.cpp:169] relu5 does not need backward computation.

I0511 16:12:12.445101 153330 net.cpp:169] conv5 does not need backward computation.

I0511 16:12:12.445113 153330 net.cpp:169] relu4 does not need backward computation.

I0511 16:12:12.445127 153330 net.cpp:169] conv4 does not need backward computation.

I0511 16:12:12.445138 153330 net.cpp:169] relu3 does not need backward computation.

I0511 16:12:12.445150 153330 net.cpp:169] conv3 does not need backward computation.

I0511 16:12:12.445163 153330 net.cpp:169] norm2 does not need backward computation.

I0511 16:12:12.445176 153330 net.cpp:169] pool2 does not need backward computation.

I0511 16:12:12.445188 153330 net.cpp:169] relu2 does not need backward computation.

I0511 16:12:12.445201 153330 net.cpp:169] conv2 does not need backward computation.

I0511 16:12:12.445214 153330 net.cpp:169] norm1 does not need backward computation.

I0511 16:12:12.445226 153330 net.cpp:169] pool1 does not need backward computation.

I0511 16:12:12.445238 153330 net.cpp:169] relu1 does not need backward computation.

I0511 16:12:12.445253 153330 net.cpp:169] conv1 does not need backward computation.

I0511 16:12:12.445266 153330 net.cpp:205] This network produces output prob

I0511 16:12:12.445297 153330 net.cpp:447] Collecting Learning Rate and Weight Decay.

I0511 16:12:12.445319 153330 net.cpp:217] Network initialization done.

I0511 16:12:12.445333 153330 net.cpp:218] Memory required for data: 62497920

E0511 16:12:13.004518 153330 upgrade_proto.cpp:609] Attempting to upgrade input file specified using deprecated transformation parameters: /home/konan/caffe/python/../models/bvlc_reference_caffenet/bvlc_reference_caffenet.caffemodel

I0511 16:12:13.004586 153330 upgrade_proto.cpp:612] Successfully upgraded file specified using deprecated data transformation parameters.

E0511 16:12:13.004601 153330 upgrade_proto.cpp:614] Note that future Caffe releases will only support transform_param messages for transformation fields.

E0511 16:12:13.004616 153330 upgrade_proto.cpp:618] Attempting to upgrade input file specified using deprecated V1LayerParameter: /home/konan/caffe/python/../models/bvlc_reference_caffenet/bvlc_reference_caffenet.caffemodel

I0511 16:12:13.263543 153330 upgrade_proto.cpp:626] Successfully upgraded file specified using deprecated V1LayerParameter

(10, 3, 227, 227)

Loading file: /home/konan/caffe/examples/images/cat.jpg

Classifying 1 inputs.

Done in 1.60 s.

[('tabby', '0.27933'), ('tiger cat', '0.21915'), ('Egyptian cat', '0.16064'), ('lynx', '0.12844'), ('kit fox', '0.05155')]

Saving results into foo
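Every "Top shape" line in the log above follows from the layer parameters in the printed CaffeNet definition. The sketch below recomputes them, assuming Caffe's output-size rules (convolution rounds down, pooling rounds up; both agree for this network) and 4-byte floats; the group setting changes only the weight shapes, not the output shape. Summing the counts of every layer's top blob, with in-place tops counted again and the input blob excluded, reproduces the logged "Memory required for data: 62497920". The table and function names are hypothetical.

```python
def ceil_div(a, b):
    """Integer ceiling of a / b for positive b."""
    return -(-a // b)


# (layer name, kind, parameters) in the order of the printed CaffeNet definition.
# "same" marks shape-preserving layers (ReLU, LRN, Dropout, Softmax).
CAFFENET = [
    ("conv1", "conv", dict(num_output=96, kernel=11, stride=4)), ("relu1", "same", {}),
    ("pool1", "pool", dict(kernel=3, stride=2)), ("norm1", "same", {}),
    ("conv2", "conv", dict(num_output=256, kernel=5, pad=2)), ("relu2", "same", {}),
    ("pool2", "pool", dict(kernel=3, stride=2)), ("norm2", "same", {}),
    ("conv3", "conv", dict(num_output=384, kernel=3, pad=1)), ("relu3", "same", {}),
    ("conv4", "conv", dict(num_output=384, kernel=3, pad=1)), ("relu4", "same", {}),
    ("conv5", "conv", dict(num_output=256, kernel=3, pad=1)), ("relu5", "same", {}),
    ("pool5", "pool", dict(kernel=3, stride=2)),
    ("fc6", "fc", dict(num_output=4096)), ("relu6", "same", {}), ("drop6", "same", {}),
    ("fc7", "fc", dict(num_output=4096)), ("relu7", "same", {}), ("drop7", "same", {}),
    ("fc8", "fc", dict(num_output=1000)), ("prob", "same", {}),
]


def top_shape(shape, kind, p):
    """Top blob shape of one layer, given its bottom shape (pad defaults to 0, stride to 1)."""
    if kind == "same":
        return shape
    if kind == "fc":
        return (shape[0], p["num_output"])  # InnerProduct flattens C x H x W
    n, c, h, w = shape
    pad, stride = p.get("pad", 0), p.get("stride", 1)
    span_h, span_w = h + 2 * pad - p["kernel"], w + 2 * pad - p["kernel"]
    if kind == "conv":  # convolution rounds down
        return (n, p["num_output"], span_h // stride + 1, span_w // stride + 1)
    if kind == "pool":  # pooling rounds up
        return (n, c, ceil_div(span_h, stride) + 1, ceil_div(span_w, stride) + 1)
    raise ValueError(f"unknown layer kind: {kind}")


def walk(input_shape, layers, bytes_per_value=4):
    """Return [(name, top shape, count)] and the total bytes of all top blobs."""
    shape, rows, total = input_shape, [], 0
    for name, kind, params in layers:
        shape = top_shape(shape, kind, params)
        count = 1
        for dim in shape:
            count *= dim
        rows.append((name, shape, count))
        total += count
    return rows, total * bytes_per_value


if __name__ == "__main__":
    rows, memory = walk((10, 3, 227, 227), CAFFENET)
    for name, shape, count in rows:
        print(name, *shape, f"({count})")            # e.g. conv1 10 96 55 55 (2904000)
    print("Memory required for data:", memory)      # 62497920
```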