#!/usr/bin/env python

# coding: utf-8

# # Final Project - James Quacinella

#

# For the final project, I will look at the follower network of a think tank's Twitter account and perform clustering to find groups of associated accounts. By examining the clusters, I hope to identify what joins them by performing some NLP tasks on the accounts' profile contents.

#

# ## Step 1 - Crawl Twitter for Followers

#

# The next section of code does not run in the notebook; it is a copy of the crawler code created for this project. It takes a single account, gets its first-level followers, and then grabs the 'second-level' followers. A second-level follower is only added if it is also a node in the first level, so we focus on the main account rather than on accounts that are only tangentially related. Note that the code actually calls `GetFriendIDs`, so the 'followers' collected are the accounts each user follows.
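# Below is a minimal, API-free sketch of the two-level crawl described above. It assumes a hypothetical `get_friend_ids` callable that stands in for the real python-twitter client (e.g. `api.GetFriendIDs`). The follow data is invented purely for illustration.

```python
from collections import defaultdict


def crawl_two_levels(root, get_friend_ids):
    """Build the two-level 'following' graph around `root`.

    get_friend_ids(user) -> iterable of IDs that `user` follows
    (a stand-in for an API call such as api.GetFriendIDs).
    """
    first_level = set(get_friend_ids(root))
    edges = defaultdict(set)
    edges[root].update(first_level)  # depth-1 edges: root -> each account it follows
    for user in first_level:
        # Depth-2 edges are kept only if they point back into the first level
        edges[user].update(first_level.intersection(get_friend_ids(user)))
    return first_level, edges


# Hypothetical follow data; account 99 is outside the first level
FAKE_FRIENDS = {"root": [1, 2, 3], 1: [2, 99], 2: [3], 3: []}
nodes, edges = crawl_two_levels("root", lambda user: FAKE_FRIENDS.get(user, []))
print(sorted(edges[1]))  # [2]: the edge to 99 is dropped
```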

# In[ ]:

#import graphlab as gl

import pickle

import twitter

import logging

import time

from collections import defaultdict

### Setup a console and file logger

logger = logging.getLogger('crawler')

logger.setLevel(logging.DEBUG)

fh = logging.FileHandler('crawler.log')

fh.setLevel(logging.INFO)

ch = logging.StreamHandler()

ch.setLevel(logging.INFO)

formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')

ch.setFormatter(formatter)

fh.setFormatter(formatter)

logger.addHandler(ch)

logger.addHandler(fh)

### Setup signals to make sure API calls only take 60s at most
# (relies on SIGALRM, so it only works on Unix and in the main thread)
from functools import wraps
import errno
import os
import signal

class TimeoutError(Exception):
    pass

def timeout(seconds=60, error_message=os.strerror(errno.ETIME)):
    ''' Decorator that raises TimeoutError if the wrapped call runs longer than `seconds`. '''
    def decorator(func):
        def _handle_timeout(signum, frame):
            raise TimeoutError(error_message)

        def wrapper(*args, **kwargs):
            signal.signal(signal.SIGALRM, _handle_timeout)
            signal.alarm(seconds)
            try:
                result = func(*args, **kwargs)
            finally:
                signal.alarm(0)  # cancel the pending alarm
            return result

        return wraps(func)(wrapper)

    return decorator

@timeout()
def getFollowers(api, follower):
    ''' Get the IDs of the accounts that `follower` follows (its "friends") via
    GetFriendIDs. Despite the function name, this is not the follower list.

    NOTE: the decorator ensures that this only runs for 60s at most. '''
    # return api.GetFollowerIDs(follower)
    return api.GetFriendIDs(follower)

### Twitter API

# Let's create our list of api OAuth parameters.
# The literal keys and secrets are redacted; credentials should never be committed.
# This assumes a local, untracked JSON file (hypothetical name: api_tokens.json)
# holding a list of objects with the keys consumer_key, consumer_secret,
# access_token_key and access_token_secret.
import json

with open("api_tokens.json") as token_file:
    API_TOKENS = json.load(token_file)

# Now create a list of twitter API objects
apis = []
for token in API_TOKENS:
    apis.append(twitter.Api(consumer_key=token['consumer_key'],
                            consumer_secret=token['consumer_secret'],
                            access_token_key=token['access_token_key'],
                            access_token_secret=token['access_token_secret'],
                            requests_timeout=60))

# The account id / screen name we want followers from

account_screen_name = 'fairmediawatch'

account_id = '54679731'

# Keep track of nodes connected to account, and all edges we need in the graph

nodes = set()

edges = defaultdict(set)

# Try to load first level followers from pickle;
# otherwise, generate them from a single API call and save via pickle
try:
    logger.info("Loading followers for %s" % account_screen_name)
    with open("following1", "rb") as f:
        following = pickle.load(f)
except Exception as e:
    logger.info("Failed. Generating followers for %s" % account_screen_name)
    # `api` is not assigned until the depth-2 section below, so use the first client
    following = apis[0].GetFriendIDs(screen_name=account_screen_name)
    with open("following1", "wb") as f:
        pickle.dump(following, f)

# Try to load the nodes and first level edges from pickle;
# otherwise generate them from the 'following' list and save
try:
    logger.info("Loading nodes and edges for depth = 1, for %s" % account_screen_name)
    with open("nodes.follow1.set", "rb") as nf, open("edges.follow1.dict", "rb") as ef:
        nodes = pickle.load(nf)
        edges = pickle.load(ef)
except Exception as e:
    logger.info("Failed. Generating nodes and edges for depth = 1, for %s" % account_screen_name)
    for follower in following:
        nodes.add(follower)
        edges[account_id].add(follower)
    with open("nodes.follow1.set", "wb") as nf:
        pickle.dump(nodes, nf)
    with open("edges.follow1.dict", "wb") as ef:
        pickle.dump(edges, ef)

### Crawling for Depth2

# Index the api list, and start from the first api object
api_idx = 0
api = apis[api_idx]

# Some accounts give us issues (either too many followers or no permissions)
blacklist = [74323323, 43532023, 19608297, 25757924, 240369959, 173634807, 17008482, 142143804]

api_updated = False

# It is nice to resume from a point in the list instead of from the beginning
# (set starting_point = None to crawl the whole list)
starting_point = 142143804
if starting_point:
    starting_point_idx = following.index(starting_point)
    following_iter = range(starting_point_idx, len(following))
else:
    following_iter = range(len(following))

# Try loading second layer of followers from pickle, otherwise start from scratch
try:
    with open("edges.follow2.dict", "rb") as f:
        edges = pickle.load(f)
    logger.info("Loaded edges.follow2 into memory!")
except Exception as e:
    logger.info("Starting from SCRATCH: did not load edges.follow2 into memory!")

# For each follower of the main account ...
for follower_idx in following_iter:
    follower = following[follower_idx]
    success = False

    # ... check if they are on the blacklist; if so, skip
    if follower in blacklist:
        logger.info("Skipping due to blacklist")
        continue

    # Otherwise, attempt to get list of their followers
    followers_depth2_list = []
    while not success:
        try:
            logger.info("Getting followers for follower %s" % follower)
            followers_depth2_list = getFollowers(api, follower)
            success = True
        except TimeoutError as e:
            # If the API call takes too long, move on to the next account
            logger.info("Timeout after 60s for follower %d" % follower)
            success = True  # technically not a success, but this ends the retry loop
        except Exception as e:
            # If we get here, we (most likely) hit the API rate limits
            logger.info("API Exception %s; api-idx = %d" % (str(e), api_idx))

            # Have we cycled back to the beginning of the api list?
            # If so, dump the edges so far via pickle and sleep
            if api_updated and api_idx % len(API_TOKENS) == 0 and api_idx >= len(API_TOKENS):
                logger.info("Save edges to pickle file for follower = %s" % follower)
                pickle.dump(edges, open("edges.follow2.dict", "wb"))
                logger.info("Sleeping ...")
                time.sleep(60)
                api_updated = False
            # Otherwise, move on to the next api object and try again
            else:
                api_idx += 1
                api = apis[api_idx % len(API_TOKENS)]
                api_updated = True

    # After getting the followers, find the intersection of those followers
    # with those of the first-level followers and add to edge dict
    if followers_depth2_list:
        logger.info("Adding followers to the graph")
        edges[follower].update(nodes.intersection(followers_depth2_list))

# Write out final list of edges via pickle

logger.info("Save edges to pickle file for follower = %s" % follower)

pickle.dump(edges, open("edges.follow2.dict", "wb"))
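# The retry logic above cycles through the API clients and sleeps once every client has failed. Below is a simplified sketch of that round-robin pattern, under these assumptions:
# - `request` and `sleep` are hypothetical callables.
# - Any exception is treated as "this client is rate limited", as the crawler does.
# - Unlike the crawler, every call starts again from the first client instead of remembering where it left off.

```python
def call_with_rotation(clients, request, sleep, max_rounds=3):
    """Try request(client) on each client in turn.

    If every client fails during a round, call sleep() (e.g. wait out the
    rate-limit window) and start another round; give up after max_rounds.
    """
    for _ in range(max_rounds):
        for client in clients:
            try:
                return request(client)
            except Exception:
                continue  # assume this client is rate limited; try the next one
        sleep()
    raise RuntimeError("all clients failed for %d rounds" % max_rounds)


# Usage with fake clients: only client "c" succeeds
def fake_request(client):
    if client != "c":
        raise IOError("rate limited")
    return "ok from " + client


print(call_with_rotation(["a", "b", "c"], fake_request, sleep=lambda: None))  # ok from c
```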

# Instead of running the above, let's just load everything via pickle:

# In[2]:

import pickle

n = open("nodes.follow1.set", "rb")

nodes = pickle.load(n)

e = open("edges.follow2.dict", "rb")

edges = pickle.load(e)

f = open("following1", "rb")

following = pickle.load(f)

# ## Step 2 - Generate Graph from Crawl

#

# First, we generate CSV files so we can load data into GraphLab Create.

# In[3]:

# Hide some noisy log output
import logging
logging.getLogger("requests").setLevel(logging.WARNING)
logging.getLogger("urllib3").setLevel(logging.WARNING)

# Import everything we need
import graphlab as gl

# Generate CSVs from the previous crawl
with open('vertices.csv', 'w') as f:
    f.write('id\n')
    for node in nodes:
        f.write(str(node) + "\n")

with open('edges.csv', 'w') as f:
    f.write('src,dst,relation\n')
    for node, followers in edges.iteritems():
        for follower in followers:
            f.write('%s,%s,%s\n' % (follower, node, 'follows'))
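# The CSV export above can also be written with Python's standard `csv` module, which quotes any field that needs it. The sketch below assumes `edges` maps each account to the set of accounts it follows, as built by the crawl. It writes rows as (follower, followed), whereas the cell above writes the reverse orientation; this makes no difference here because both directions are added to the SGraph. It is written for Python 3 text files.

```python
import csv
import io


def write_graph_csvs(nodes, edges, vertex_file, edge_file):
    """Write vertices.csv- and edges.csv-style output to open text files.

    nodes: iterable of account IDs
    edges: dict mapping an account to the set of accounts it follows
    """
    vertex_writer = csv.writer(vertex_file, lineterminator="\n")
    vertex_writer.writerow(["id"])
    for node in nodes:
        vertex_writer.writerow([node])

    edge_writer = csv.writer(edge_file, lineterminator="\n")
    edge_writer.writerow(["src", "dst", "relation"])
    for account, followed in edges.items():
        for other in followed:
            edge_writer.writerow([account, other, "follows"])


# Usage with in-memory files; in the notebook these would be
# open('vertices.csv', 'w', newline='') and open('edges.csv', 'w', newline='')
vertices_out, edges_out = io.StringIO(), io.StringIO()
write_graph_csvs({1, 2}, {1: {2}}, vertices_out, edges_out)
print(edges_out.getvalue())
```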

# Next, let us use these CSV files and load them into a graph object called g:

# In[4]:

# Load Data

gvertices = gl.SFrame.read_csv('vertices.csv')

gedges = gl.SFrame.read_csv('edges.csv')

# Create graph

g = gl.SGraph()

g = g.add_vertices(vertices=gvertices, vid_field='id')

g = g.add_edges(edges=gedges, src_field='src', dst_field='dst')

# Add each edge in the reverse direction too, so the graph is effectively undirected
g = g.add_edges(edges=gedges, src_field='dst', dst_field='src')

# Let's try to visualize the graph!

# In[28]:

# Visualize graph?

gl.canvas.set_target('browser')

g.show(vlabel="id")

# It looks like the graph is too large to display.

#

# ## Central / Important Nodes

#

# Let's use PageRank to find important nodes in the network:

# In[5]:

pr = gl.pagerank.create(g)

pr.get('pagerank').topk(column_name='pagerank')
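# To make the PageRank scores above less of a black box, here is a small power-iteration PageRank in plain Python. It is a sketch of the textbook algorithm, not GraphLab's implementation:
# - It uses a damping factor of 0.85.
# - It normalizes scores to sum to 1. GraphLab's scores may be scaled differently, so only the ordering should be compared.
# - Nodes with no out-links spread their score evenly over all nodes.

```python
def pagerank(adjacency, damping=0.85, tol=1e-10, max_iter=200):
    """Power-iteration PageRank.

    adjacency: dict mapping each node to the set of nodes it links to.
    Returns a dict of scores that sum to 1.
    """
    nodes = set(adjacency)
    for targets in adjacency.values():
        nodes.update(targets)
    n = len(nodes)
    rank = dict((v, 1.0 / n) for v in nodes)
    for _ in range(max_iter):
        # Score held by dangling nodes is redistributed uniformly
        dangling = sum(rank[v] for v in nodes if not adjacency.get(v))
        new_rank = dict((v, (1.0 - damping + damping * dangling) / n) for v in nodes)
        for v, targets in adjacency.items():
            for t in targets:
                new_rank[t] += damping * rank[v] / len(targets)
        delta = sum(abs(new_rank[v] - rank[v]) for v in nodes)
        rank = new_rank
        if delta < tol:
            break
    return rank


# Usage: a hub linked in both directions to three leaves, as in the
# symmetrized follower graph
star = {0: {1, 2, 3}, 1: {0}, 2: {0}, 3: {0}}
scores = pagerank(star)
print(max(scores, key=scores.get))  # 0: the hub ranks highest
```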

# ## Import the Graph into igraph

#

# Next, we will load the graph data into igraph and perform clustering to find communities:

# In[140]:

from igraph import *

# Create empty graph
twitter_graph = Graph(directed=False)

# Setup the nodes
for node in nodes:
    if isinstance(node, int):
        twitter_graph.add_vertex(name=str(node))

# The crawled account itself (id 54679731) is not in `nodes`, but the edge
# cells below connect accounts to it, so make sure it has a vertex too
if "54679731" not in twitter_graph.vs["name"]:
    twitter_graph.add_vertex(name="54679731")

# In[142]:

# Setup the edges
for user in edges:
    for follower in edges[user]:
        try:
            twitter_graph.add_edge(str(follower), str(user))
        except Exception as e:
            # Report the failing edge and skip the rest of this user's list
            print user, follower
            print e
            break

# In[143]:

# Add the 'ego' edges

following = pickle.load(open("following1", "rb"))

for node in following:

twitter_graph.add_edge(str(node), "54679731")
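# Because `twitter_graph` is undirected, a mutual follow (A follows B and B follows A) adds two parallel edges between the same pair of vertices. The ego edges may also repeat edges already added from `edges`. The sketch below assumes the same {account: set of followed accounts} dict and collapses everything into unique undirected pairs of vertex names. Inside the notebook, igraph's own `Graph.simplify()`, which removes multi-edges and self-loops from an existing graph, is likely the simpler fix.

```python
def undirected_edge_list(edges):
    """Turn {account: set(followed accounts)} into sorted unique undirected pairs.

    Vertex names are strings, matching how the notebook names igraph vertices;
    self-loops are dropped.
    """
    pairs = set()
    for account, followed in edges.items():
        for other in followed:
            a, b = str(account), str(other)
            if a != b:
                pairs.add((a, b) if a < b else (b, a))  # order-independent key
    return sorted(pairs)


# Usage: 1 and 2 follow each other, 3 "follows" itself
print(undirected_edge_list({1: {2, 3}, 2: {1}, 3: {3}}))
# [('1', '2'), ('1', '3')]
```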

# In[145]:

# for v in twitter_graph.vs.select(name_eq="54679731"):

# print v

# for e in twitter_graph.es.select(_source=v.index): print e

# for e in twitter_graph.es.select(_target=533): print e

# In[102]:

# for v in twitter_graph.vs:

# if len(twitter_graph.es.select(_source=v.index)) == 0 and len(twitter_graph.es.select(_target=v.index)) == 0:

# print v

# In[ ]:

pickle.dump(twitter_graph, open("twitter_graph", "wb"))

# In[19]:

# Load the twitter graph from pickle to avoid re-running the cells above
twitter_graph = pickle.load(open("twitter_graph", "rb"))

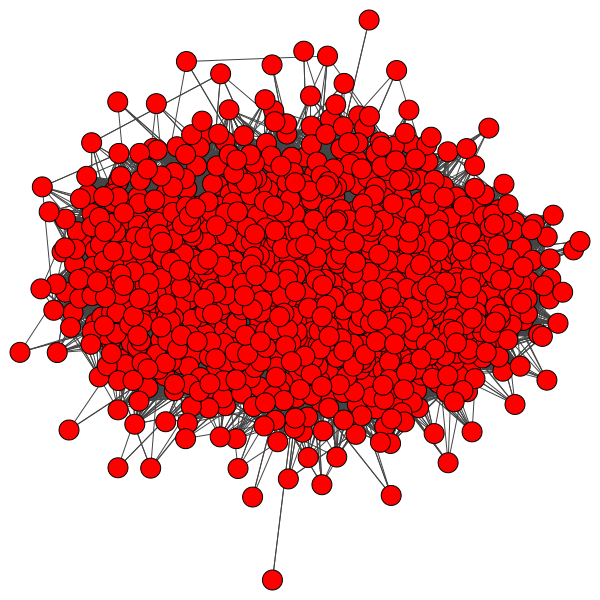

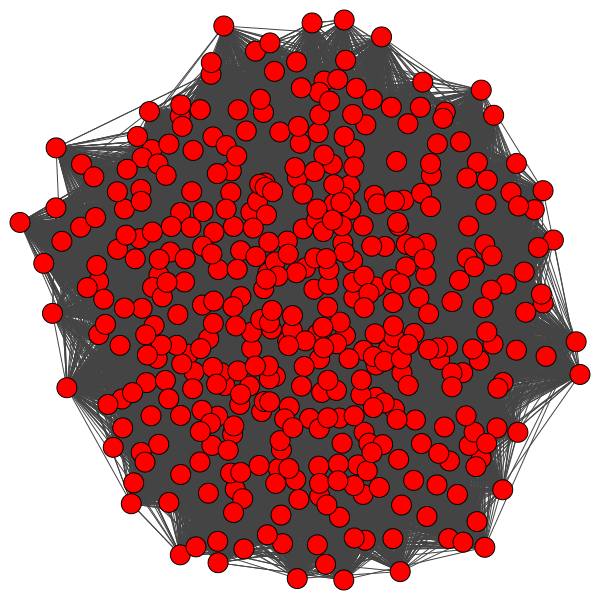

# ## Display the Graph

# In[ ]:

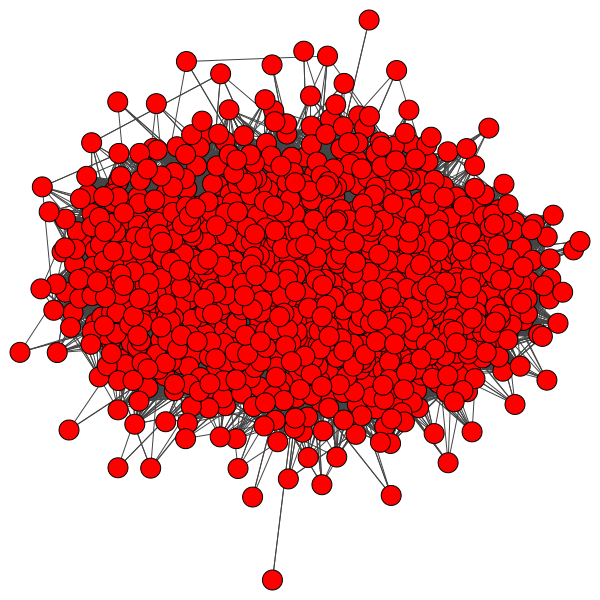

layout = twitter_graph.layout_drl()

plt1 = plot(twitter_graph, 'graph.drl.png', layout = layout)

# In[257]:

from IPython.display import HTML

s = """ """

h = HTML(s); h

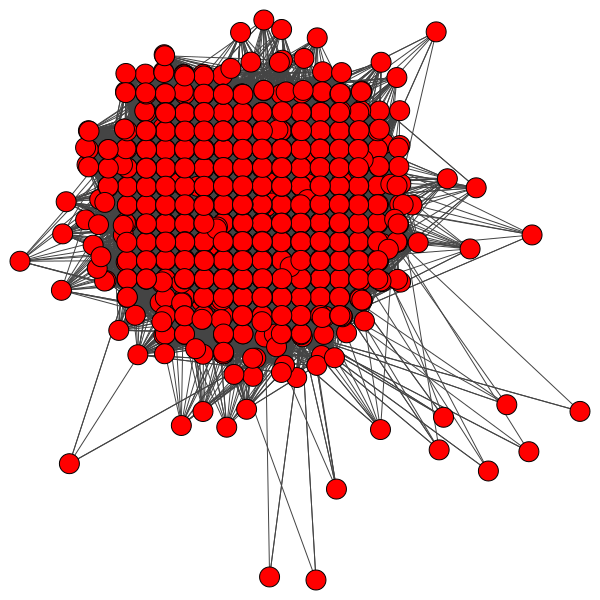

# In[214]:

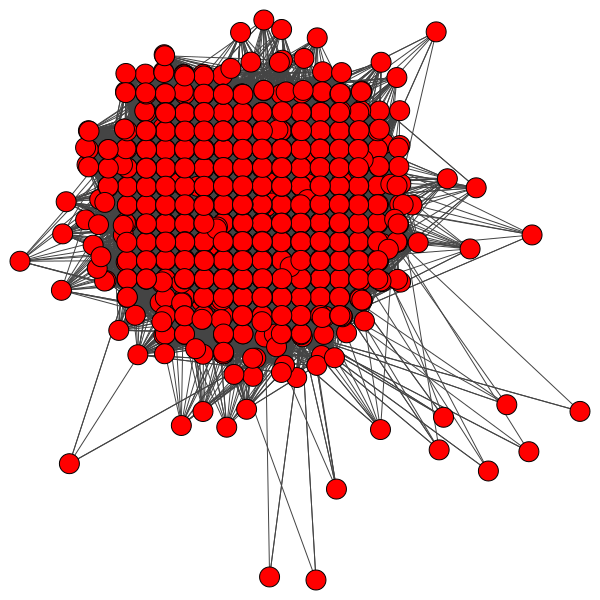

layout = twitter_graph.layout("graphopt")

plt2 = plot(twitter_graph, 'graph.graphopt.png', layout = layout)

# In[258]:

s = """

"""

h = HTML(s); h


# In[227]:

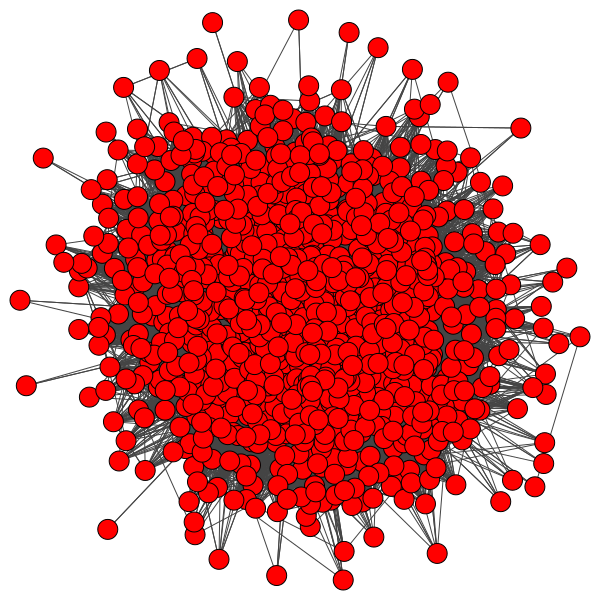

layout = twitter_graph.layout("lgl")

plt2 = plot(twitter_graph, 'graph.lgl.png', layout = layout)

# In[260]:

s = """

"""

h = HTML(s); h


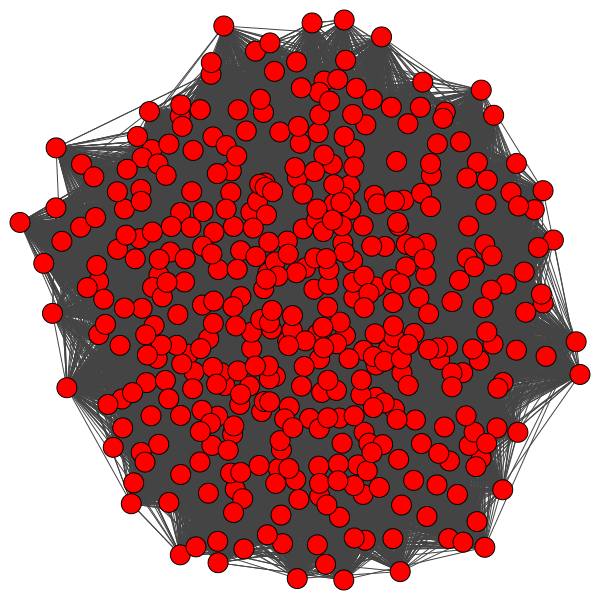

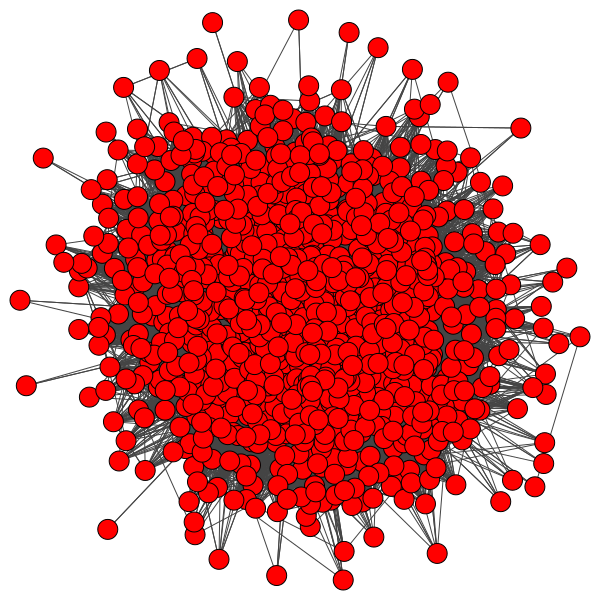

# Let's trim the graph down to only the high-degree nodes (degree of at least 200):

# In[237]:

# https://lists.nongnu.org/archive/html/igraph-help/2012-11/msg00047.html

twitter_graph2 = twitter_graph.copy()

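low_degree = twitter_graph2.vs(_degree_lt=200)
# Delete edges whose endpoints are both low-degree ...
twitter_graph2.es.select(_within=low_degree).delete()
# ... then delete every vertex whose remaining degree is still below 200
twitter_graph2.vs(_degree_lt=200).delete()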

layout = twitter_graph2.layout_drl()

plt1 = plot(twitter_graph2, 'graph2.drl.png', layout = layout)

# In[261]:

s = """

"""

h = HTML(s); h
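# The two-step trim above can be reproduced on a plain edge list: delete edges whose endpoints are both low-degree, then delete vertices whose remaining degree is still below 200. The sketch below uses a hypothetical `min_degree` parameter. Step 1 never removes an edge that touches a vertex whose original degree is at least `min_degree`. The result is therefore simply the subgraph induced by those high-degree vertices.

```python
from collections import Counter


def degrees(edge_list):
    """Degree of every vertex in an undirected edge list."""
    degree = Counter()
    for a, b in edge_list:
        degree[a] += 1
        degree[b] += 1
    return degree


def trim_low_degree(edge_list, min_degree):
    """Mirror the igraph cell: drop low-low edges, then low-degree vertices."""
    degree = degrees(edge_list)
    low = set(v for v, d in degree.items() if d < min_degree)
    # Step 1: delete edges whose endpoints are both low-degree
    kept = [(a, b) for a, b in edge_list if not (a in low and b in low)]
    # Step 2: delete vertices whose remaining degree is still too low
    degree = degrees(kept)
    survivors = set(v for v, d in degree.items() if d >= min_degree)
    return [(a, b) for a, b in kept if a in survivors and b in survivors]


# Usage: two hubs (degree 3) plus low-degree leaves
toy = [("h1", "a"), ("h1", "b"), ("h1", "h2"), ("h2", "c"), ("h2", "d"), ("a", "b")]
print(trim_low_degree(toy, min_degree=3))  # [('h1', 'h2')]
```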


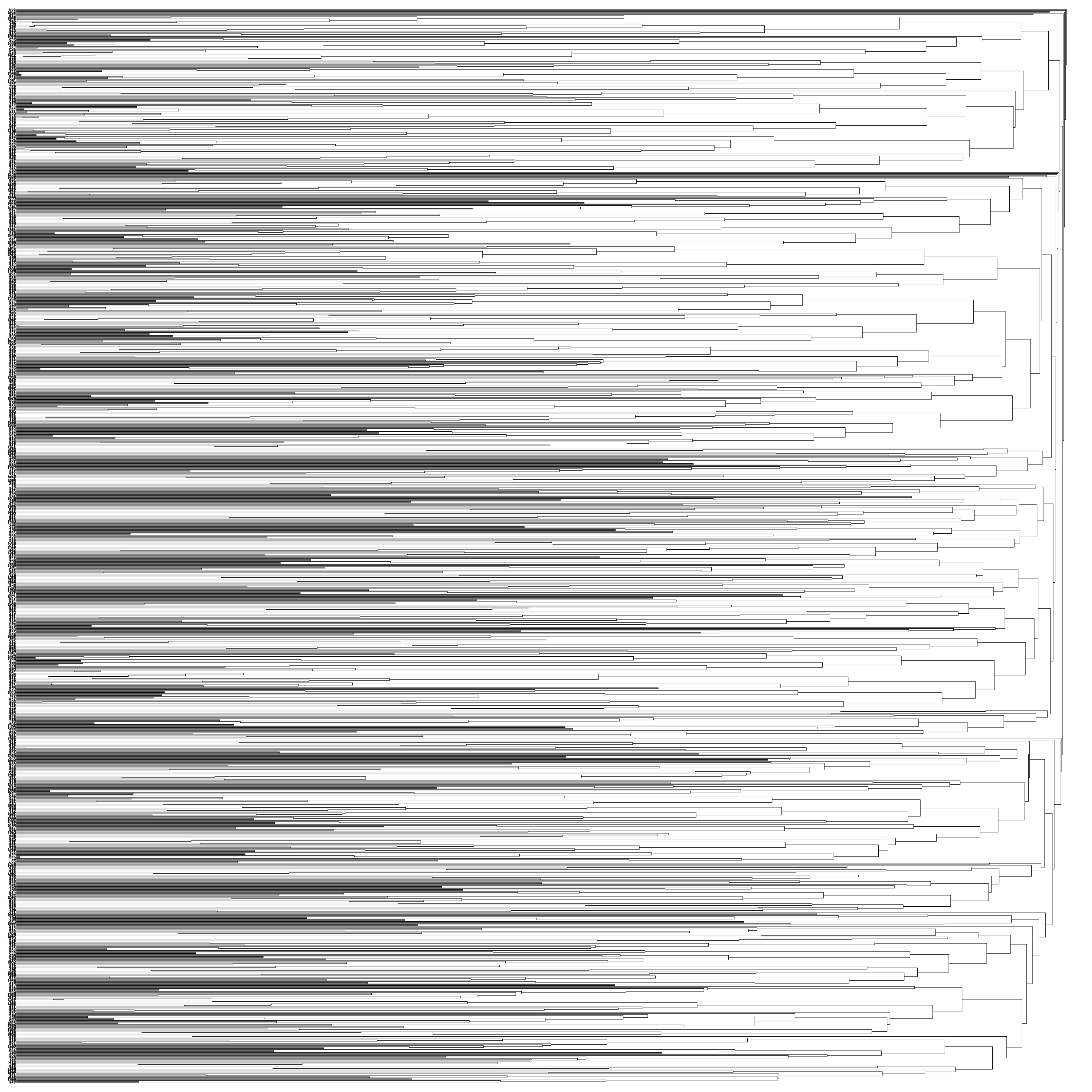

# ## Clustering

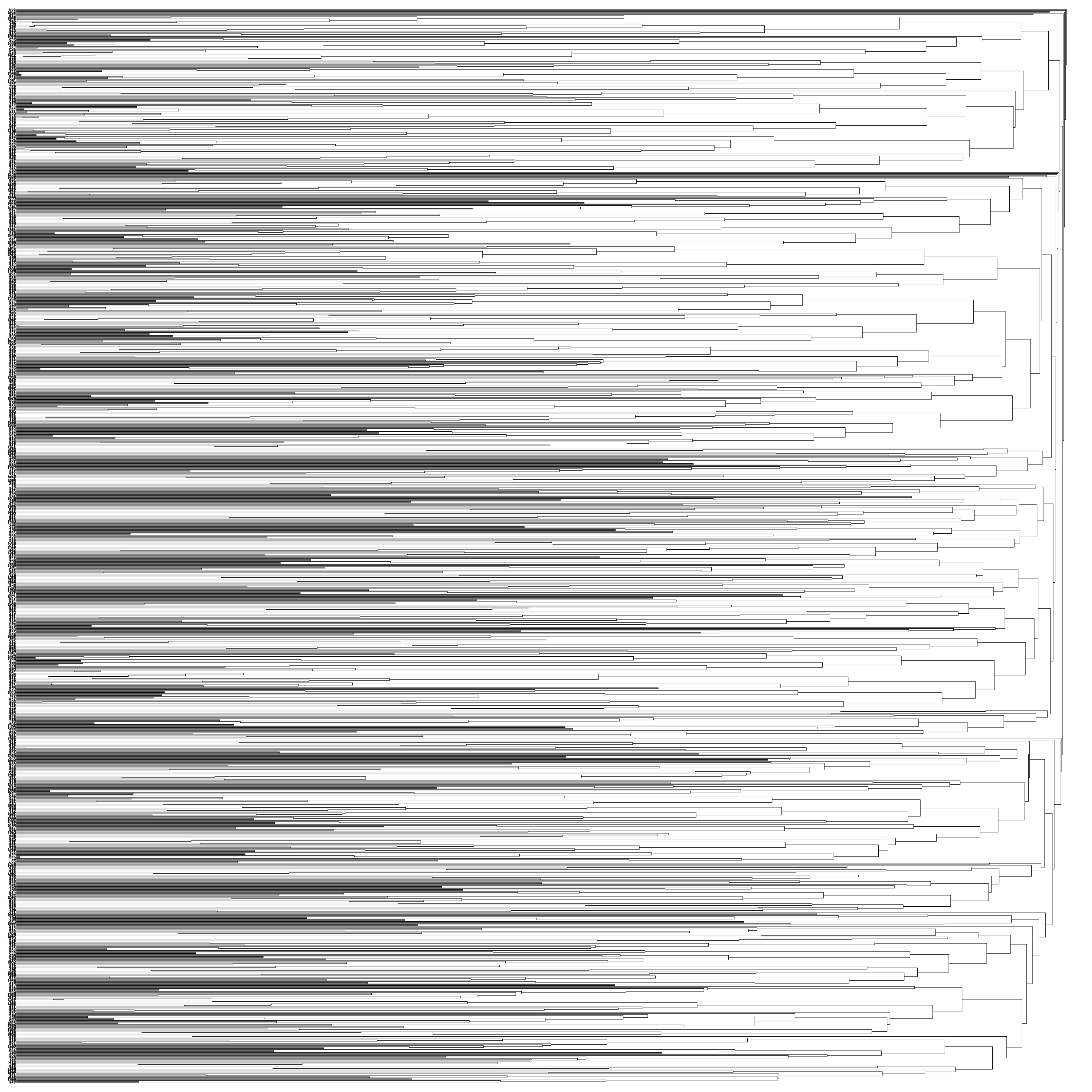

# First, let's run the walktrap community detection algorithm, which produces a hierarchical clustering (a dendrogram):

# In[243]:

wc = twitter_graph.community_walktrap()

plot(wc, 'cluster.walktrap.png', bbox=(3000,3000))

# In[266]:

s = """

"""

h = HTML(s); h
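# `community_walktrap()` returns a dendrogram (a sequence of merges) rather than a flat clustering. python-igraph's `VertexDendrogram.as_clustering()` cuts it into flat communities, by default at the count that maximizes modularity. As a rough illustration of what a cut does, the sketch below replays the first merges with union-find. It assumes igraph's merge convention: step i joins clusters a and b into a new cluster with id n + i, where ids below n are single vertices.

```python
def cut_dendrogram(n_vertices, merges, n_clusters):
    """Cut an igraph-style merge list into n_clusters flat clusters.

    merges[i] = (a, b) joins clusters a and b into cluster n_vertices + i.
    Returns a membership list with cluster ids 0..n_clusters-1.
    """
    parent = list(range(n_vertices + len(merges)))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    # Replay only the first n_vertices - n_clusters merges
    for i, (a, b) in enumerate(merges[:n_vertices - n_clusters]):
        new_id = n_vertices + i
        parent[find(a)] = new_id
        parent[find(b)] = new_id

    # Relabel each root as 0, 1, ... in order of first appearance
    labels = {}
    return [labels.setdefault(find(v), len(labels)) for v in range(n_vertices)]


# Usage: 4 vertices; merge 0+1 (-> 4), then 2+3 (-> 5), then 4+5 (-> 6)
merges = [(0, 1), (2, 3), (4, 5)]
print(cut_dendrogram(4, merges, 2))  # [0, 0, 1, 1]
```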


# In[22]:

eigen = twitter_graph.community_leading_eigenvector()

plot(eigen, 'cluster.eigen.test.png', mark_groups=True, bbox=(5000,5000))

# In[270]:

s = """

"""

h = HTML(s); h
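# The leading-eigenvector method used above, like the default cut of the walktrap dendrogram, is driven by modularity:
#
# Q = (1/2m) * sum_ij [A_ij - k_i k_j / (2m)] delta(c_i, c_j)
#
# Here m is the number of edges, k_i the degree of vertex i and c_i its community. Grouping the sum by community gives Q = sum_c [L_c/m - (d_c/(2m))^2], where L_c is the number of edges inside community c and d_c its total degree. Below is a plain-Python sketch of that second form. It takes a simple undirected graph as (u, v) index pairs plus an igraph-style membership list. python-igraph's `Graph.modularity(membership)` computes the same quantity and can serve as a cross-check on `twitter_graph`.

```python
from collections import defaultdict


def modularity(edge_list, membership):
    """Newman modularity of a simple undirected, unweighted graph.

    edge_list: list of (u, v) vertex-index pairs, each edge listed once
    membership: membership[v] is the community id of vertex v
    """
    m = float(len(edge_list))
    internal = defaultdict(int)    # L_c: edges with both ends in community c
    degree_sum = defaultdict(int)  # d_c: total degree of community c
    for u, v in edge_list:
        degree_sum[membership[u]] += 1
        degree_sum[membership[v]] += 1
        if membership[u] == membership[v]:
            internal[membership[u]] += 1
    return sum(internal[c] / m - (degree_sum[c] / (2 * m)) ** 2 for c in degree_sum)


# Usage: two triangles joined by a single bridge edge (2, 3)
triangles = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]
print(round(modularity(triangles, [0, 0, 0, 1, 1, 1]), 4))  # 0.3571
```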


# The result is still messy, so let's try the same thing on the smaller, trimmed graph:

# In[238]:

eigen2 = twitter_graph2.community_leading_eigenvector()

plot(eigen2, 'cluster2.eigen.png', mark_groups=True, bbox=(5000,5000))

# In[269]:

s = """

"""

h = HTML(s); h


# ## Bios of Twitter Users

#

# Now that we have the graph communities, let's crawl Twitter for each user's bio. I will not reproduce the code, as it is mostly a copy of the Twitter crawl above using a different API call; see biocrawl.py in the repo. We'll load the data from pickle:

# In[23]:

bios = pickle.load(open("bios", "rb"))

from collections import defaultdict

# Concatenate the bios of all accounts in a cluster into one document per cluster
documents = defaultdict(str)
for v_idx, cluster in enumerate(eigen.membership):
    twitter_id = int(twitter_graph.vs[v_idx].attributes()['name'])
    if twitter_id in bios:
        documents[cluster] += "\n%s" % bios[twitter_id]

# ### Important Nodes from PageRank

#

# Let's look at the important nodes in the network and their associated bios:

# In[61]:

import prettytable

# One table per cluster
pts = []
for cluster in set(eigen.membership):
    # Create a pretty table of PageRank scores and bios for this cluster
    pt = prettytable.PrettyTable(["PageRank", "Bio", "Cluster ID"])
    pt.align["Bio"] = "l"  # Left align bio
    pt.max_width = 60
    pt.padding_width = 1  # One space between column edges and contents (default)
    pts.append(pt)

# Loop through the top 100 PageRank'ed nodes and file each one under its cluster
x = pr.get('pagerank')
for row in x.topk(column_name='pagerank', k=100):
    if row['__id'] in bios:
        vidx = twitter_graph.vs.select(name_eq=str(row['__id']))[0].index
        clusterid = eigen.membership[vidx]
        # The first line of each stored entry is taken as the bio
        pts[clusterid].add_row([row['pagerank'], bios[row['__id']].split("\n")[0], clusterid])

# Let's see the results!
for pt in pts:
    print pt

# Looking at the tables above, the important nodes found by PageRank suggest an explanation for each cluster:
#
# - Cluster 0: Media personalities
#     - This one is a little tougher to gauge, but cluster 0 is oriented more towards editors and personalities, while the others tend to be organizations or institutional accounts
# - Cluster 1: Independent media, 'far-left' media outlets
#     - Amy Goodman (Democracy Now!), muckreads, #Occupy, GritTV, Truthout
#     - Notice that this is a smaller group than the others
# - Cluster 2: Mainstream liberal organizations or media personalities
#     - HuffPost, NY Times, CNN, MSNBC, Bill Moyers, New Yorker
# - Cluster 3: People / journalists involved with civil liberties, human rights and whistleblowing
#     - FOIA, PGP, Whistleblower, secrecy

# ### Bigram Analysis

#

# Let's do a bigram analysis on the per-cluster documents, which combine users' bios and their latest tweets, using a custom stop-word filter and keeping only tokens of length 3 or more:

# In[93]:

import nltk
from nltk.collocations import *
from nltk.tokenize import RegexpTokenizer
from nltk.corpus import stopwords

# Custom stopwords, mostly twitter slang and other internet rubbish
# (a set makes the membership test in preProcess fast)
my_stopwords = set(stopwords.words('english'))
my_stopwords.update(['http', 'https', 'bit', 'ly', 'co', 'rt', 'rts', 'com', 'org', 'dot', 'go', 'via', 'follow', 'us', 'retweet', 'also', 'run'])

def preProcess(text):
    ''' Lowercase, tokenize on word characters, and drop stopwords, numbers and tokens shorter than 3 characters. '''
    text = text.lower()
    tokenizer = RegexpTokenizer(r'\w+')
    tokens = tokenizer.tokenize(text)
    filtered_words = [w for w in tokens if w not in my_stopwords and not w.isdigit() and len(w) > 2]
    return " ".join(filtered_words)

def getBigrams(content, threshold=5):
    ''' Return (bigram, raw frequency) pairs seen at least `threshold` times, most frequent first. '''
    tokens = nltk.wordpunct_tokenize(preProcess(content))
    bigram_measures = nltk.collocations.BigramAssocMeasures()
    finder = BigramCollocationFinder.from_words(tokens)
    finder.apply_freq_filter(threshold)
    scored = finder.score_ngrams(bigram_measures.raw_freq)
    return sorted(scored, key=lambda t: t[1], reverse=True)
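# For readers without NLTK at hand, the counting done by `getBigrams` can be approximated in plain Python:
# 1. Pair each token with its successor.
# 2. Drop pairs seen fewer than `threshold` times, which is what `apply_freq_filter` does.
# 3. Score each surviving pair by its count divided by the number of tokens. This normalization is assumed here to match NLTK's `raw_freq`.
#
# Treat this as an approximation rather than a drop-in replacement.

```python
from collections import Counter


def top_bigrams(tokens, threshold=2):
    """Return (bigram, score) pairs, most frequent first.

    A bigram is a pair of adjacent tokens; pairs seen fewer than `threshold`
    times are dropped, and the score is count / number of tokens
    (assumed here to mirror NLTK's raw_freq measure).
    """
    counts = Counter(zip(tokens, tokens[1:]))
    n = float(len(tokens))
    scored = [(bigram, count / n) for bigram, count in counts.items() if count >= threshold]
    # Highest score first; ties broken alphabetically so the output is stable
    return sorted(scored, key=lambda t: (-t[1], t[0]))


tokens = "new york times new york post new york".split()
print(top_bigrams(tokens))  # [(('new', 'york'), 0.375)]
```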

# In[94]:

bigrams = []
cluster_ids = sorted(documents)  # sort so the column order matches the cluster labels
for clusteridx in cluster_ids:
    bigrams.append(getBigrams(documents[clusteridx], threshold=2))

# Create a pretty table with one column of top bigrams per cluster
headers = ["Cluster %d" % c for c in cluster_ids]
bigram_pt = prettytable.PrettyTable(headers)
for header in headers:
    bigram_pt.align[header] = "l"
bigram_pt.max_width = 60
bigram_pt.padding_width = 1  # One space between column edges and contents (default)

# Clusters yield different numbers of bigrams; pad the shorter columns with blanks
for idx in range(max(len(b) for b in bigrams)):
    bigram_pt.add_row([" ".join(b[idx][0]) if idx < len(b) else "" for b in bigrams])

# Let's see the results!
print bigram_pt

# The bigrams for each cluster support the outline of the clusters above. Expanding on that outline:
#
# - Cluster 0: Media personalities
#     - executive director, contributing writer, senior fellow, contributing editor
# - Cluster 1: Independent media, 'far-left' media outlets
#     - senior editor, staff writer, social justice, media democracy, independent media, media justice, news analysis, digital media, investigative reporting
# - Cluster 2: Mainstream liberal organizations or media personalities
#     - new york times, new yorker, washington post, daily kos, washington correspondent
# - Cluster 3: People / journalists involved with civil liberties, human rights and whistleblowing
#     - human rights, national security, foreign policy, civil liberties, digital rights, pgp(!)

#

# ### Future Considerations

#

# - Using TF-IDF so that bigrams or tokens that appear in all documents are weighted less
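# One way to act on the TF-IDF idea is sketched below, under these assumptions:
# - Each cluster's combined text is treated as one document.
# - Term frequency is a bigram's share of that cluster's bigrams.
# - Each bigram is weighted by idf = log(N / df), where N is the number of clusters and df the number of clusters containing the bigram.
#
# A phrase that appears in every cluster then gets idf = log(1) = 0 and sinks to the bottom. The input, a dict of already pre-processed token lists per cluster, is hypothetical; the tokens could come from `preProcess(...).split()`.

```python
import math
from collections import Counter


def tfidf_bigrams(cluster_tokens):
    """Rank each cluster's bigrams by TF-IDF, one document per cluster.

    cluster_tokens: dict mapping cluster id -> list of tokens.
    Returns dict mapping cluster id -> [(bigram, score), ...], best first.
    """
    counts = dict((c, Counter(zip(toks, toks[1:]))) for c, toks in cluster_tokens.items())
    n_docs = float(len(counts))
    doc_freq = Counter()  # number of clusters in which each bigram appears
    for bigram_counts in counts.values():
        doc_freq.update(set(bigram_counts))
    scores = {}
    for c, bigram_counts in counts.items():
        total = float(sum(bigram_counts.values())) or 1.0
        weighted = [(b, (k / total) * math.log(n_docs / doc_freq[b]))
                    for b, k in bigram_counts.items()]
        scores[c] = sorted(weighted, key=lambda t: (-t[1], t[0]))
    return scores


docs = {0: "civil liberties human rights".split(),
        1: "human rights media justice".split()}
ranked = tfidf_bigrams(docs)
print(ranked[0][0][0])  # ('civil', 'liberties')
print(ranked[0][-1])    # (('human', 'rights'), 0.0)
```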

# In[ ]:

"""

h = HTML(s); h

# ## Bios of Twitter Users

#

# Now that we have the graph communities, lets crawl twitter for the bios on each user. I will not reproduce the code, as it is mostly a copy of the above twitter crawl, using a different API call. Check biocrawl.py in the repo. We'll load the data from pickle:

# In[23]:

bios = pickle.load(open("bios", "rb"))

from collections import defaultdict

documents = defaultdict(str)

for v_idx, cluster in zip(range(len(eigen.membership)), eigen.membership):

twitter_id = int(twitter_graph.vs[v_idx].attributes()['name'])

if twitter_id in bios:

documents[cluster] += "\n%s" % bios[ twitter_id ]

# ### Important Nodes from PageRank

#

# Lets look at the important nodes in the network and their associated bios:

# In[61]:

import prettytable

pts = []

for cluster in set(eigen.membership):

# Create a pretty table of tweet contents and any expanded urls

pt = prettytable.PrettyTable(["Rank", "Bio", "Cluster ID"])

pt.align["Bio"] = "l" # Left align bio

pt.max_width = 60

pt.padding_width = 1 # One space between column edges and contents (default)

pts.append(pt)

# Loop thru top 100 page-rank'ed nodes

x = pr.get('pagerank')

for row in x.topk(column_name='pagerank', k=100):

if row['__id'] in bios:

vidx = twitter_graph.vs.select(name_eq=str(row['__id']))[0].index

clusterid = eigen.membership[vidx]

pts[clusterid].add_row([row['pagerank'], bios[row['__id']].split("\n")[0], clusterid ])

# Lets see the results!

for pt in pts:

print pt

# Looking at the above, I think I can come up with explanations of the clusters just by looking at the important nodes as found by page rank:

#

# - Cluster 0: Media personalities

# - This is a little tougher to gauge, but cluster 0 is more oriented towards editors and personalities, while the others tend to be organizations or institutional accounts

# - Cluster 1: Independent media, 'far-left' media outlets

# - Amy Goodman (democracy now), muckreads, #Occupy, GritTV, Truthout

# - Notice how this is a smaller group than the others

# - Cluster 2: Mainstream Liberal organizations or media personalities

# - HuffPost, NY Times, CNN, MSNBC, Bill Moyers, New Yorker

# - Cluster 3: people / journalists involved with civil liberties, human rights and whistleblowing

# - FOIA, PGP, Whistleblower, secrecy

# ### Bigram Analysis

#

# Lets do a bigram analysis on the documents that created from a combination from user bio's and their latest tweet, using a custom stop word filter and filtering for tokens of length of 3 or more:

# In[93]:

import string

import nltk

from nltk.collocations import *

from nltk.tokenize import RegexpTokenizer

from nltk.corpus import stopwords

# Custom stopwords, mostly twitter slang and other internet rubbish

my_stopwords = stopwords.words('english')

my_stopwords = my_stopwords + ['http', 'https', 'bit', 'ly', 'co', 'rt', 'rts', 'com', 'org', 'dot', 'go', 'via', 'follow', 'us', 'follow', 'retweet', 'also', 'run']

def preProcess(text):

text = text.lower()

tokenizer = RegexpTokenizer(r'\w+')

tokens = tokenizer.tokenize(text)

filtered_words = [w for w in tokens if not w in my_stopwords and not w.isdigit() and len(w) > 2]

return " ".join(filtered_words)

def getBigrams(content, threshold=5):

tokens = nltk.wordpunct_tokenize(preProcess(content))

bigram_measures = nltk.collocations.BigramAssocMeasures()

finder = BigramCollocationFinder.from_words(tokens)

finder.apply_freq_filter(threshold)

scored = finder.score_ngrams(bigram_measures.raw_freq)

return sorted([ (bigram, score) for (bigram, score) in scored ], key=lambda t: t[1], reverse=True)

# In[94]:

bigrams = []

for clusteridx in documents:

bigrams.append(getBigrams(documents[clusteridx], threshold=2))

# Create a pretty table of tweet contents and any expanded urls

bigram_pt = prettytable.PrettyTable(["Cluster 0", "Cluster 1", "Cluster 2", "Cluster 3"])

bigram_pt.align["Cluster 0"] = "l"

bigram_pt.align["Cluster 1"] = "l"

bigram_pt.align["Cluster 2"] = "l"

bigram_pt.align["Cluster 3"] = "l"

bigram_pt.max_width = 60

bigram_pt.padding_width = 1 # One space between column edges and contents (default)

for idx in range(len(bigrams[0])):

bigram_pt.add_row([" ".join(bigram[idx][0]) for bigram in bigrams])

# Lets see the results!

print bigram_pt

# Looking through the bigrams for each cluster seems to help support the outline above of the different clusters. To expand on the outline below:

#

# - Cluster 0: Media personalities

# - executive director, contributing writer , senior fellow, contributing editor

# - Cluster 1: Independent media, 'far-left' media outlets, senior editor, staff writer

# - social justice, media democracy, independent media , media justice, news analysis , digital media, nvestigative reporting

# - Cluster 2: Mainstream Liberal organizations or media personalities

# - new york times, new yorker, washington post, daliy kos, washington correspondent

# - Cluster 3: people / journalists involved with civil liberties, human rights and whistleblowing

# - Bigrams: human rights , national security , foreign policy, civil liberties, digital rights, pgp(!)

#

# ### Future Considerations

#

# - Using TF-IDF to make sure bigrams or tokens that appear in all documents are weighed less

# In[ ]:

"""

h = HTML(s); h

# In[214]:

layout = twitter_graph.layout("graphopt")

plt2 = plot(twitter_graph, 'graph.graphopt.png', layout = layout)

# In[258]:

s = """

"""

h = HTML(s); h

# In[214]:

layout = twitter_graph.layout("graphopt")

plt2 = plot(twitter_graph, 'graph.graphopt.png', layout = layout)

# In[258]:

s = """ """

h = HTML(s); h

# In[227]:

layout = twitter_graph.layout("lgl")

plt2 = plot(twitter_graph, 'graph.lgl.png', layout = layout)

# In[260]:

s = """

"""

h = HTML(s); h

# In[227]:

layout = twitter_graph.layout("lgl")

plt2 = plot(twitter_graph, 'graph.lgl.png', layout = layout)

# In[260]:

s = """ """

h = HTML(s); h

# Lets trim down the graph to only large nodes:

# In[237]:

# https://lists.nongnu.org/archive/html/igraph-help/2012-11/msg00047.html

twitter_graph2 = twitter_graph.copy()

nodes = twitter_graph2.vs(_degree_lt=200)

twitter_graph2.es.select(_within=nodes).delete()

twitter_graph2.vs(_degree_lt=200).delete()

layout = twitter_graph2.layout_drl()

plt1 = plot(twitter_graph2, 'graph2.drl.png', layout = layout)

# In[261]:

s = """

"""

h = HTML(s); h

# Lets trim down the graph to only large nodes:

# In[237]:

# https://lists.nongnu.org/archive/html/igraph-help/2012-11/msg00047.html

twitter_graph2 = twitter_graph.copy()

nodes = twitter_graph2.vs(_degree_lt=200)

twitter_graph2.es.select(_within=nodes).delete()

twitter_graph2.vs(_degree_lt=200).delete()

layout = twitter_graph2.layout_drl()

plt1 = plot(twitter_graph2, 'graph2.drl.png', layout = layout)

# In[261]:

s = """ """

h = HTML(s); h

# ## Clustering

# First, lets run the walktrap community algorithm, which seems to produce a heirarchical clustering:

# In[243]:

wc = twitter_graph.community_walktrap()

plot(wc, 'cluster.walktrap.png', bbox=(3000,3000))

# In[266]:

s = """

"""

h = HTML(s); h

# ## Clustering

# First, lets run the walktrap community algorithm, which seems to produce a heirarchical clustering:

# In[243]:

wc = twitter_graph.community_walktrap()

plot(wc, 'cluster.walktrap.png', bbox=(3000,3000))

# In[266]:

s = """ """

h = HTML(s); h

# In[22]:

eigen = twitter_graph.community_leading_eigenvector()

plot(eigen, 'cluster.eigen.test.png', mark_groups=True, bbox=(5000,5000))

# In[270]:

s = """

"""

h = HTML(s); h

# In[22]:

eigen = twitter_graph.community_leading_eigenvector()

plot(eigen, 'cluster.eigen.test.png', mark_groups=True, bbox=(5000,5000))

# In[270]:

s = """ """

h = HTML(s); h

# Still messy, lets try doing the same thing but on the smaller graph:

# In[238]:

eigen2 = twitter_graph2.community_leading_eigenvector()

plot(eigen2, 'cluster2.eigen.png', mark_groups=True, bbox=(5000,5000))

# In[269]:

s = """

"""

h = HTML(s); h

# Still messy, lets try doing the same thing but on the smaller graph:

# In[238]:

eigen2 = twitter_graph2.community_leading_eigenvector()

plot(eigen2, 'cluster2.eigen.png', mark_groups=True, bbox=(5000,5000))

# In[269]:

s = """ """

h = HTML(s); h

# ## Bios of Twitter Users

#

# Now that we have the graph communities, lets crawl twitter for the bios on each user. I will not reproduce the code, as it is mostly a copy of the above twitter crawl, using a different API call. Check biocrawl.py in the repo. We'll load the data from pickle:

# In[23]:

bios = pickle.load(open("bios", "rb"))

from collections import defaultdict

documents = defaultdict(str)

for v_idx, cluster in zip(range(len(eigen.membership)), eigen.membership):

twitter_id = int(twitter_graph.vs[v_idx].attributes()['name'])

if twitter_id in bios:

documents[cluster] += "\n%s" % bios[ twitter_id ]

# ### Important Nodes from PageRank

#

# Lets look at the important nodes in the network and their associated bios:

# In[61]:

import prettytable

pts = []

for cluster in set(eigen.membership):

# Create a pretty table of tweet contents and any expanded urls

pt = prettytable.PrettyTable(["Rank", "Bio", "Cluster ID"])

pt.align["Bio"] = "l" # Left align bio

pt.max_width = 60

pt.padding_width = 1 # One space between column edges and contents (default)

pts.append(pt)

# Loop thru top 100 page-rank'ed nodes

x = pr.get('pagerank')

for row in x.topk(column_name='pagerank', k=100):

if row['__id'] in bios:

vidx = twitter_graph.vs.select(name_eq=str(row['__id']))[0].index

clusterid = eigen.membership[vidx]

pts[clusterid].add_row([row['pagerank'], bios[row['__id']].split("\n")[0], clusterid ])

# Lets see the results!

for pt in pts:

print pt

# Looking at the above, I think I can come up with explanations of the clusters just by looking at the important nodes as found by page rank:

#

# - Cluster 0: Media personalities

# - This is a little tougher to gauge, but cluster 0 is more oriented towards editors and personalities, while the others tend to be organizations or institutional accounts

# - Cluster 1: Independent media, 'far-left' media outlets

# - Amy Goodman (democracy now), muckreads, #Occupy, GritTV, Truthout

# - Notice how this is a smaller group than the others

# - Cluster 2: Mainstream Liberal organizations or media personalities

# - HuffPost, NY Times, CNN, MSNBC, Bill Moyers, New Yorker

# - Cluster 3: people / journalists involved with civil liberties, human rights and whistleblowing

# - FOIA, PGP, Whistleblower, secrecy

# ### Bigram Analysis

#

# Lets do a bigram analysis on the documents that created from a combination from user bio's and their latest tweet, using a custom stop word filter and filtering for tokens of length of 3 or more:

# In[93]:

import nltk
from nltk.collocations import BigramAssocMeasures, BigramCollocationFinder
from nltk.tokenize import RegexpTokenizer
from nltk.corpus import stopwords

# Custom stopwords: NLTK's English list plus Twitter slang and other internet rubbish
my_stopwords = set(stopwords.words('english')) | {
    'http', 'https', 'bit', 'ly', 'co', 'rt', 'rts', 'com', 'org', 'dot',
    'go', 'via', 'follow', 'us', 'retweet', 'also', 'run'}

# Split on runs of word characters, dropping punctuation
tokenizer = RegexpTokenizer(r'\w+')

def preProcess(text):
    ''' Lowercase and tokenize text, dropping stopwords, numbers and tokens shorter than 3 characters. '''
    tokens = tokenizer.tokenize(text.lower())
    filtered_words = [w for w in tokens if w not in my_stopwords and not w.isdigit() and len(w) > 2]
    return " ".join(filtered_words)

def getBigrams(content, threshold=5):
    ''' Return (bigram, raw frequency) pairs seen at least `threshold` times, most frequent first. '''
    tokens = nltk.wordpunct_tokenize(preProcess(content))
    bigram_measures = BigramAssocMeasures()
    finder = BigramCollocationFinder.from_words(tokens)
    finder.apply_freq_filter(threshold)
    # score_ngrams already returns the bigrams sorted by descending score
    return finder.score_ngrams(bigram_measures.raw_freq)
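
# For reference, a dependency-free sketch of what getBigrams computes, assuming NLTK's `raw_freq`
# score is a bigram's count divided by the total number of tokens and that `apply_freq_filter(n)`
# drops bigrams seen fewer than n times. `sketch_raw_freq_bigrams` is a hypothetical helper that
# only makes the scoring concrete; the analysis itself uses NLTK.
from collections import Counter

def sketch_raw_freq_bigrams(tokens, min_freq=2):
    ''' Count adjacent token pairs, drop pairs seen fewer than min_freq times,
    and score each remaining pair as count / number of tokens (hypothetical helper). '''
    n_tokens = len(tokens)
    counts = Counter(zip(tokens, tokens[1:]))
    scored = [(pair, float(c) / n_tokens) for pair, c in counts.items() if c >= min_freq]
    # Highest score first, ties broken alphabetically
    return sorted(scored, key=lambda t: (-t[1], t[0]))

# Toy token list: 8 tokens, and only ('human', 'rights') occurs twice
toy_bigrams = sketch_raw_freq_bigrams(
    "human rights national security human rights foreign policy".split(), min_freq=2)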

# In[94]:

# Compute bigrams per cluster, in cluster-ID order so the columns line up with their headers
cluster_ids = sorted(documents)
bigrams = [getBigrams(documents[c], threshold=2) for c in cluster_ids]

# Create a pretty table with one column of bigrams per cluster, most frequent first
headers = ["Cluster %d" % c for c in cluster_ids]
bigram_pt = prettytable.PrettyTable(headers)
for col in headers:
    bigram_pt.align[col] = "l"
bigram_pt.max_width = 60
bigram_pt.padding_width = 1  # One space between column edges and contents (default)

# Clusters yield different numbers of bigrams, so pad the shorter columns with blanks
for idx in range(max(len(b) for b in bigrams)):
    bigram_pt.add_row([" ".join(b[idx][0]) if idx < len(b) else "" for b in bigrams])

# Let's see the results!
print bigram_pt

# The bigrams for each cluster support the outline above. Expanding on it:

#

# - Cluster 0: Media personalities

#     - executive director, contributing writer, senior fellow, contributing editor

# - Cluster 1: Independent media, 'far-left' media outlets

#     - social justice, media democracy, independent media, media justice, news analysis, digital media, investigative reporting, senior editor, staff writer

# - Cluster 2: Mainstream liberal organizations or media personalities

#     - new york times, new yorker, washington post, daily kos, washington correspondent

# - Cluster 3: People / journalists involved with civil liberties, human rights and whistleblowing

#     - human rights, national security, foreign policy, civil liberties, digital rights, pgp(!)
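
#

# One way to check this outline more directly is to list, for each cluster, only the bigrams that appear in no other cluster's list. A minimal sketch, assuming each cluster's bigrams are given as a list of word tuples (e.g. `[bg for bg, score in getBigrams(...)]`); `sketch_distinctive_bigrams` and the toy input are hypothetical:

# In[ ]:

def sketch_distinctive_bigrams(bigrams_by_cluster):
    ''' For each cluster, keep only the bigrams that no other cluster produced.
    bigrams_by_cluster: {cluster ID: [bigram tuple, ...]} (hypothetical helper). '''
    distinctive = {}
    for cid, grams in bigrams_by_cluster.items():
        # Union of every other cluster's bigrams
        others = set()
        for other_cid, other_grams in bigrams_by_cluster.items():
            if other_cid != cid:
                others.update(other_grams)
        distinctive[cid] = [g for g in grams if g not in others]
    return distinctive

# Toy input: 'human rights' is shared, so it is dropped from both clusters
toy_distinct = sketch_distinctive_bigrams({
    0: [('executive', 'director'), ('human', 'rights')],
    3: [('human', 'rights'), ('civil', 'liberties')],
})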

#

# ### Future Considerations

#

# - Use TF-IDF so that bigrams or tokens appearing in every cluster's document are weighted less
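
#

# A minimal sketch of that idea, treating each cluster's document as one TF-IDF document and using the plain idf = log(N / df) variant (several variants exist). With this variant a term that appears in every cluster gets idf = log(1) = 0, so it drops out entirely. `sketch_tfidf` and the toy documents are hypothetical.

# In[ ]:

import math
from collections import Counter

def sketch_tfidf(docs):
    ''' docs: {cluster ID: [term, ...]}, where a term can be a token or a bigram tuple.
    Returns {cluster ID: {term: tf * idf}} with tf = raw count and idf = log(N / df). '''
    n_docs = len(docs)
    # Document frequency: in how many clusters each term appears at least once
    df = Counter()
    for terms in docs.values():
        df.update(set(terms))
    weights = {}
    for cid, terms in docs.items():
        tf = Counter(terms)
        weights[cid] = dict((t, c * math.log(float(n_docs) / df[t])) for t, c in tf.items())
    return weights

# Toy documents: 'news' appears in both clusters, so its weight is 0 everywhere
toy_weights = sketch_tfidf({0: ['news', 'news', 'editor'], 1: ['news', 'rights']})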

# In[ ]:

"""

h = HTML(s); h
